For digital ecosystem builders like us, DrupalCon is kind of like our Super Bowl: best-in-class web devs coming together to level up our Drupal prowess.

Earlier this summer, we joined 1,800 other Drupal users in Pittsburgh, turning the David L. Lawrence Convention Center into a four-day meeting of the minds for anyone who builds with Drupal.

Why DrupalCon? Well, we’re huge fans of the platform. We’ve been developing Drupal projects since 2008 and have served the Drupal community by sponsoring the annual New England Drupal Camp; hosting the monthly Providence Drupal Meetup; developing new modules like Oomph Paragraphs, Ooyala, and Getty Images; and supporting the Talking Drupal Podcast.

That’s why, for us, DrupalCon has become an in-person way to validate the work we’re proud to do online. This year (our fourth!), Oomph sent four team members to host our booth in the Expo Center and soak up the positive energy and game-changing innovation DrupalCon is known for.

Here’s what we saw, what we learned, and what we can’t wait to start applying now that we’re back at work.

Our Notes on DriesNote

“Pittsburgh is a city where things are made. A little like Drupal.”

That’s from this year’s DriesNote, the annual speech Drupal founder Dries Buytaert delivers to kick off DrupalCon and shed light on what he sees as the biggest challenges and opportunities facing developers.

This year’s call to action? Innovate. Think beyond what’s possible to create bigger and better software solutions. Find new and interesting ways to power digital experiences with Drupal. And embrace revolutionary technology like Drupal’s new ChatGPT integration and the soon-to-launch Drupal 10.1.0.

Dries also presented the entrants for the 2023 Pitch-burgh Innovation Contest — an opportunity for Drupal users to win funding for their unique ideas. A panel of judges selected seven finalists, then the audience chose five winners. Drupal awarded a collective $108,000 to fund their submissions.

Watch DriesNote 2023 on YouTube

Session Takeaways

After DriesNote, we squeezed our way into a few jam-packed (literally and figuratively) sessions focused on tech, UX, design, and much more. Here are the top takeaways from the sessions we attended.

1. The future of Drupal could be headless.

Josh Koenig, CEO and co-founder at Pantheon, led a session about JavaScript and the future of Drupal, offering his hot takes on where Drupal has been and where it may need to go to remain successful.

He noted that while Drupal didn’t start out as enterprise software, that’s exactly what it has become. Yet there’s still work to do before Drupal integrates seamlessly into the enterprise space.

According to Josh, decoupled and headless CMS solutions are the future of enterprise software, while new developers entering the workforce are focusing more on modern JavaScript skills and less on PHP and TWIG. At the same time, current administrator interfaces in the headless space are too rudimentary, decoupled solutions are too complex, and widespread adoption of a single open-source, headless CMS hasn’t happened yet.

These are all gaps Drupal and its users could look to fill.

2. User personas are (mostly) out. Mindset-focused design is in.

“Death to Personas” was the title of one of our favorite sessions, led by Mediacurrent Head of Product Elliott Mower. Now, before we go on, let’s get this out of the way: We like personas at Oomph. And many of the audience members at this session agreed.

Yet Elliott suggested there’s a different, and perhaps better, way to design inclusive web experiences. He believes that user personas are a hangover from the days when software for non-developers was still cutting-edge. Personas can also be hyper-specific or over-generalized, and neither lends itself to accessible and impactful user experiences.

After all, your customers’ needs aren’t a number; they’re the product of complex driving forces that can’t be distilled into a set of demographics. Elliott encouraged the audience to think qualitatively instead, specifically about the customer’s mindset, to build more meaningful customer experiences.

Watch the full Death to Personas session on YouTube

3. Authenticity is the foundation for long-lasting client relationships.

In a world where everyone is serving someone — clients, bosses, coworkers — how do you create relationships with staying power? Authenticity.

Palantir.net Customer Success Manager Britany Acre spoke about how the days of emotionless professionalism are over. People want to be humanized, to be seen for the whole person they are both within and outside of the workplace.

Communicating authentically, showing concern, and even sharing when we’re having a bad day can, according to Britany, foster the trust that drives genuine partnership.

Watch the full Authenticity is Contagious session on YouTube

Welcoming Women in Tech

Recent research shows that women make up only 25% of workers in the technology sector. Jess Romeo, Director of Web Publishing Platforms at Pfizer, offered reasons for and solutions to this disparity during her Women in Tech keynote.

Jess said many women wonder if they need a computer science degree and don’t know what opportunities for advancement exist in the field. Many women also feel the pressure to put work before their family — an expectation that’s slowly but surely dying out.

She also offered these words of encouragement for women working toward a career in tech:

- The “rules” of the tech industry have dramatically changed since COVID-19

- Women are welcome in tech, more than ever before

- There has never been more awareness and action on diversity and inclusion

Watch the full Women in Tech keynote on YouTube

Birds of a Feather Sessions

DrupalCon’s Birds of a Feather (BoF) sessions are an opportunity to network and collaborate with like-minded people. These informal gatherings feature open discussions about Drupal-related topics, like technical issues and business challenges.

People love BoFs because they’re more of-the-moment, usually organized while DrupalCon is in full swing. This year, organizers also accepted ideas in advance, and we appreciated the opportunity to have more structured and thoughtful conversations.

For us, the BoF sessions are also a chance to share our skills and perspective with newer Drupal builders. Because of our involvement in the Drupal Scholarship Program, our lead back-end engineer, Phil, also had the opportunity to mentor up-and-coming engineers during the sessions.

Getting Social

Oomph just wants to have fu-un. (Get the reference?)

It’s true, though! As much as we worked, we played. We took our team out to a Pittsburgh Pirates Monday night ball game and watched the Pirates take home a 5-4 win over the Oakland Athletics.

During what little spare time we had, we also connected with old friends (and made new ones) at special events hosted by Pantheon and Acquia.

DrupalCon Swag

Did you even go to a conference if you came home without swag?

Our favorite was the plushy Drupal droplets at the Northern Commerce booth, a nod to the Dutch word “druppel,” which translates to “drop” in English and is Drupal’s namesake.

Pantheon also gave away t-shirts (with four different design options) that our team will be repping back at Oomph HQ.

As always, DrupalCon left us energized and excited to continue contributing to the future of web dev. We’re already looking forward to 2024!

THE BRIEF

Connecting People and Planet

NEEF’s website is the gateway that connects its audiences to a vast array of learning experiences – but its existing platform was falling short. The organization needed more visually interesting resources and content, but it also knew its legacy Drupal site couldn’t keep up.

NEEF wanted to build a more powerful platform that could seamlessly:

- Communicate its mission and showcase its impact to inspire potential funders

- Broaden its audience reach through enhanced accessibility, content, and SEO

- Be a valuable resource by providing useful and engaging content, maps, toolkits, and online courses

- Build relationships by engaging users on the front end with easy-to-use content, then channeling the resulting user data into back-end functionality for user-based tracking

THE APPROACH

Strategy is the foundation for effective digital experiences and the intuitive designs they require. Oomph first homed in on NEEF’s key goals, then implemented a plan to meet them: leveraging existing features that work, adding critical front- and back-end capabilities, and packaging it all in an engaging, user-centric new website.

Information architecture is at the core of user experience (UX). We focused on organizing NEEF’s information to make it more accessible and appealing to its core audiences: educators, conservationists, nonprofits, and partners. Our designers then transformed that strategy into wireframes and dynamic designs, all of which we developed into a custom Drupal site.

The New NEEF: User-Centered Design

A Custom Site To Fuel Connection

NEEF needed a digital platform as unique as its organization, which is why Oomph ultimately delivered a suite of custom components designed to accommodate a variety of content needs.

Engaging and thoughtful design

NEEF’s new user experience is simple and streamlined. Visual cues aid in wayfinding (all Explore pages follow the same hero structure, for example), while imagery, micro-interactions (such as hover effects), and a bold color palette draw the user in. The UX also emphasizes accessibility and inclusivity: the high contrast between the font colors and the background makes the website more readable for people with visual impairments, while people with different skin tones can now see themselves represented in NEEF’s new library of 100 custom icons.

Topic-based browsing

From water conservation to climate change, visitors often come to the NEEF site to learn about a specific subject. We overhauled NEEF’s existing site map to include topic-based browsing, with pages that roll resources, storytelling, and NEEF’s impact into one cohesive package. Additional links in the footer also make it easier for specific audiences to find information, such as nonprofits seeking grants or teachers looking for educational materials.

NPLD-hosted resources and event locator

Oomph refreshed existing components and added new ones to support one of NEEF’s flagship programs, National Public Lands Day (NPLD). People interested in hosting an event can use the new components to set one up easily, manage it from their own dashboard, and add it to NEEF’s event locator. Once the event has passed, it’s automatically unlisted from the locator but archived, so hosts can duplicate and relaunch it in future years.

THE RESULTS

Protecting the Planet, One User at a Time

Oomph helped NEEF launch its beautiful, engaging, and interactive site in May 2023. Within three months, NEEF’s team had built more than 100 new landing pages using the new component library, furthering its goal to build deeper connections with its audiences.

As NEEF’s digital presence continues to grow, so will its impact — all with the new custom site as its foundation.

Among enterprise-scale organizations, from healthcare to government to higher education, we’ve seen many content owners longing for a faster, easier way to manage content-rich websites. While consumer-level content platforms like Squarespace or Wix make it easy to assemble web pages in minutes, most enterprise-level platforms prioritize content governance, stability, and security over ease of use.

Which is a nice way of saying, sometimes building a new page is as much fun as getting a root canal.

That’s why we’re excited about Site Studio, a robust page-building tool from our partners at Acquia. Site Studio makes content editing on Drupal websites faster and more cost-effective, while making it easy for non-technical users to create beautiful, brand-compliant content.

In this article, we’ll explain what Site Studio is, why you might want it for your next Drupal project, and a few cautions to consider.

What is Site Studio?

Formerly known as Cohesion, Site Studio is a low-code visual site builder for Drupal that makes it easy to create rich, component-based pages without writing code in PHP, HTML, or CSS. Essentially, it’s a more feature-rich alternative to Drupal’s native design tool, Layout Builder.

How does Site Studio work? Site developers lay the groundwork by building a component library and reusable templates with brand-approved design elements, such as hero banners, article cards, photo grids, buttons, layouts, and more. They can either create custom components or customize existing components from a built-in UI kit.

Content editors, marketers, and other non-technical folks can then create content directly in the front end of the website, using a drag-and-drop visual page builder with a full WYSIWYG interface and real-time previews.

Who is Site Studio For?

In our experience, the businesses that benefit most from a powerful tool like Site Studio tend to be enterprise-level organizations with content-rich websites — especially those that own multiple sites, like colleges and universities.

Within those organizations, there are a number of roles that can leverage this tool:

Content owners

With Site Studio, marketers and content editors can browse to any web page they want to update, and edit both the content and settings directly on the page. Rewriting a header, swapping an image with a text box, or rearranging a layout can be done in just seconds.

Site builders

Using Drupal’s site configuration interfaces and Site Studio’s theming tools, site builders can easily create Drupal websites end-to-end, establishing everything from the information architecture to the content editing experience.

Brand managers

Managers can define site-wide elements, like headers and footers or page templates, to ensure that an organization’s branding and design preferences are carried out. They can also create sub-brand versions of websites that have unique styles alongside consistent brand elements.

IT and web teams

By putting content creation and updates in the hands of content authors, Site Studio frees up developers to work on more critical projects. In addition, new developers don’t need to have expert-level Drupal theming experience, because Site Studio takes care of the heavy lifting.

What Can You Do With Site Studio?

Site Studio makes it easy to create and manage web content with impressive flexibility, giving content owners greater control over their websites without risking quality or functionality. Here’s how.

Go to market faster.

Site Studio’s low-code nature and library of reusable components (the building blocks of a website) speeds up both site development and content creation. Creators can quickly assemble content-rich pages, while developers can easily synchronize brand styles, components, and templates.

Site Studio provides a UI Kit with around 50 predefined components, such as Text, Image, Slider, and Accordion. Developers can also build custom components. Change any component in the library, and all instances of that component will update automatically. You can also save layout compositions as reusable “helpers” to streamline page creation.
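The “change once, update everywhere” behavior is easiest to understand as instances that reference a shared definition instead of copying it. The Python sketch below is only a conceptual illustration of that idea. It is not Site Studio’s data model or API, and `Component`, `ComponentInstance`, and the `hero` template are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """A reusable component definition stored once in a shared library (hypothetical)."""
    name: str
    template: str  # markup with {placeholders} filled in per instance


@dataclass
class ComponentInstance:
    """One placement of a component on a page (hypothetical).

    It keeps a reference to the shared library plus its own content,
    not a copy of the component's markup.
    """
    library: dict
    component_name: str
    content: dict

    def render(self) -> str:
        # The definition is looked up at render time, so an edit to the
        # library entry shows up in every instance that points to it.
        definition = self.library[self.component_name]
        return definition.template.format(**self.content)


library = {"hero": Component("hero", "<h2 class='hero'>{text}</h2>")}
pages = [
    ComponentInstance(library, "hero", {"text": "Welcome"}),
    ComponentInstance(library, "hero", {"text": "About us"}),
]

# Edit the library definition once...
library["hero"] = Component("hero", "<h1 class='hero hero--bold'>{text}</h1>")

# ...and both instances render with the new markup.
for page in pages:
    print(page.render())
```

Each page keeps only its own content (“Welcome”, “About us”). The markup comes from the single library entry, which is why one edit reaches every page.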

Build beautiful pages easily.

While we love the power and versatility of Drupal, its page building function has never been as user-friendly as, say, WordPress. Site Studio’s Visual Page Builder brings the ease of consumer-level platforms to the enterprise website world.

This intuitive, drag-and-drop interface lets users add or rewrite text, update layouts, and change fonts, styles, colors, or images without any technical help. And, it’s easy to create new pages using components or page templates from the asset library.

Ensure brand consistency.

With Site Studio, you can define standards for visual styles and UI elements at the component level. This provides guardrails for both front-end developers and content creators, who draw on the component library to build new pages. In addition, Site Studio’s import and sync capabilities make it easy to enforce brand consistency across multiple sites.

Get the best out of Drupal.

Because Site Studio is designed exclusively for Drupal, it supports many of Drupal’s core features. With Site Studio’s component library, for instance, you can create templates for core content types in Drupal. Site Studio also supports a number of contributed content modules (created by Drupal’s open-source community), so developers can add additional features that are compatible with Site Studio’s interface.

What Are Some Limitations of Using Site Studio?

There’s no doubt Site Studio makes life easier for everyone from marketers to web teams. But there are a few things to consider, in terms of resource costs and potential risks.

Start from the ground up.

To ensure the best experience, Site Studio should be involved in almost all areas of your website. Unlike other contributed modules, it’s not a simple add-on — plan on it being the core of your Drupal site’s architecture.

This will let you make decisions based on how Site Studio prefers a feature to be implemented, rather than bending Drupal to fit your needs (as is often the case). Staying within Site Studio’s guardrails will make development easier and faster.

Be careful with custom components.

With its recent Custom Components feature, Site Studio does let developers build components in their preferred code instead of its low-code tools. So you can add some custom functionality, but you must work within Site Studio’s architecture, which adds development time and cost.

If you instead decide to sidestep Site Studio and build something custom for a given content type, you’ll lose access to all of Site Studio’s components and templates for that content. You’ll also have to manage content in separate systems and pay for the custom development.

Rolling back changes is tough.

A standard Drupal site has two underlying building blocks: database and code. Drupal uses the code (written by developers) to carry out functions with the database.

When a developer changes, say, the HTML code for a blog title, the change happens in the code, not the database. If that change happened to break the page style, you could roll back the change by reverting to the previous code. In addition, most developers test changes first in a sandbox-type environment before deploying them to the live website.

By contrast, with Site Studio, most changes happen exclusively in the database and are deployed via configuration. This presents a few areas of caution:

- Users with the correct permissions can override configuration on a live site, which could impact site functionality.

- Database changes can have far-reaching impacts. If you have to roll back the database to fix a problem, you’ll lose any content changes that were made since the last backup.

That’s why Site Studio requires meticulous QA and careful user permissioning to prevent inadvertent changes that affect site functionality.

One Last Thing: You Still Need Developers

While it’s true that just about anyone in your organization can create pages with Site Studio’s intuitive interface, there are still aspects of building and maintaining a Drupal website that require a developer. Those steps include:

- Setup and implementation of Site Studio,

- Building reusable components and templates, and

- Back-end maintenance (like updates, compliance, and security).

However, once the components have been built, it’s easy for non-technical content owners to create beautiful pages. In the end, you’ll be able to launch websites and pages faster — with the creativity and consistent identity your brand deserves.

Interested in learning whether Site Studio is a good fit for your Drupal website? Contact us for more info.

We are thrilled to share that Oomph has been recognized as an Acquia Certified Drupal Cloud Practice for completing Acquia’s rigorous evaluation program, which recognizes the highest standards of technical delivery on the platform.

To earn Drupal Cloud Practice Certification, Acquia partners must meet a stringent set of technical criteria. These requirements include a core team of Acquia certified developers, significant hands-on experience delivering Acquia Drupal Cloud products to clients, and a meticulous company review with Acquia partner specialists.

“I am incredibly proud that our team has achieved this Acquia Practice Certification,” said Christopher Murray, CEO at Oomph. “We have a long history of delivering impactful client solutions around Drupal and Acquia and we are passionate and excited about extending our work within the Acquia ecosystem.”

The Acquia Practice Certification Program rewards partners who demonstrate a mastery of Acquia’s Cloud Platform in three separate areas: Drupal Cloud, Marketing Cloud and DXP. These certifications are awarded to organizations with a proven record of technical achievement, and a commitment to driving transformative business engagements on the Acquia Platform.

As a Certified Drupal Cloud Practice, Oomph receives the benefits of a deeper working relationship with Acquia, and heightened visibility as a trusted technical partner.

“We’re proud to recognize Oomph as a certified Drupal Cloud Practice,” said Mark Royko, Director of Practice Development at Acquia. “At Acquia, we continually strive to serve more customers while helping our valued partners grow their businesses. With Drupal Cloud certification, we know we can count on partners like Oomph to help us reach those goals.”

This honor is one of several Acquia accolades that Oomph has achieved since becoming an Acquia Partner in 2012. This year Oomph won a 2022 Acquia Engage Award for our work designing and building the platform that powers many of the websites operated by the state of Rhode Island.

“These certifications are awarded to organizations with a proven record of technical achievement, and a commitment to driving transformative business engagements on the Acquia Platform. It helps more customers realize the tremendous value of working with Acquia’s Drupal Cloud.”

— Christopher Murray, CEO

We’re always looking to expand our Acquia knowledge to help our partners make the most of their Drupal websites. Our team leads the way on emerging Drupal practices so we can advise our clients how to build innovative websites that help them forge the best connections with their audiences.

As an Acquia Partner, we’re excited to help you deliver great Drupal experiences. Start by reaching out: contact us today to connect with an expert!

A job well done, as they say, is its own reward. At Oomph, we believe that’s true. But awards are pretty nice, too. And we’ve just won a big one: Oomph was named a winner of a 2022 Engage Award by digital platform experience provider Acquia for our work designing and building the platform that powers many of the websites operated by the state of Rhode Island.

Oomph won Leader of the Pack – Public Sector, in the Doers category, recognizing organizations that are setting the bar for digital experiences in their field or industry.

The award-winning project (see more in this case study) began with the goal of creating a new design system for the public-facing sites of Rhode Island’s government organizations, such as the governor’s office and the Office of Housing and Community Development. Oomph’s work on the sites, which began launching in December 2020, helped Rhode Island improve communications by developing common design elements and styles that appeared consistently across all the websites and by providing each agency the same tools and capabilities to keep their sites up to date and functioning at their best.

We’d Like to Thank…

The appreciation for the project has been especially satisfying for Rhode Island ETSS Web Services Manager Robert W. Martin, who oversaw it on the client side. “We are extremely proud to receive such recognition,” he says. “This accomplishment could not have happened without the public-private partnership between the State of Rhode Island, NIC RI, Oomph, and Acquia. Through the Oomph team’s high level of engagement, we were able to deliver the entire platform on time, on quality, and on budget, all during a global pandemic. A truly remarkable achievement!”

Folks have been excited on the Oomph side as well. “It’s an honor, really, and recognition of an excellent team effort,” says Jack Hartman, head of delivery with Oomph. “We went into this project knowing we were introducing the team to powerful new technologies such as Acquia Site Factory. Our successful delivery of the new platform for Rhode Island is a testament to Acquia feeling that we’ve not only utilized their services, but modeled how they feel their products can best be put to use in bringing value to their clients.”

A Remarkable Collaboration

Martin is quick to credit the project’s success to the Oomph team’s hard work, expertise and commitment to collaboration. He explains: “We were always impressed with Oomph’s breadth of technical knowledge and welcomed their UX expertise. However, what stood out the most to me was the great synergy that our teams developed.”

In keeping with that spirit of synergy, Hartman is also proud of how the teams came together to get the job done. “We’re all quite transparent communicators and effective collaborators. Those two attributes allowed us to successfully navigate the vast number of stakeholders and agency partners that were involved from the beginning to the end,” he says.

Planning for the Unplannable

A particular challenge no one foresaw in the early stages of the project was the COVID-19 pandemic. Though it wasn’t part of the initial strategy, the team quickly realized that due to COVID-19, they needed to change course and focus on COVID-related sites first, which made it possible to tap into COVID relief funding for the project overall.

“Our first site to roll out on the new platform was Rhode Island’s COVID-19 response communication hub,” says Hartman. “It added an extra layer of both seriousness and excitement knowing that we were building a critical resource for the people of Rhode Island as they navigated a really unpredictable pandemic scenario.”

The Future of Digital Infrastructure

Of course, even with the added responsibility and challenge of a global pandemic, this project already had plenty of excitement and investment from the Oomph team. “It’s not often that a team’s full time and focus is secured to work on a single initiative, and this engagement was very much that,” Hartman says. “A group of highly skilled craftspeople with the singular focus of laying the groundwork for the future of a state’s digital infrastructure.”

But all the internal excitement in the world doesn’t count for much if the end product doesn’t land with its audience, and in this case, the new sites were a resounding success. As the sites launched, they quickly met with approval from those who use the sites most: Rhode Island’s government agencies and the state’s citizens.

“Agencies have been extremely enthusiastic and excited for both the design and functionality of their new sites,” Martin says. “Some of the most common points of appreciation included the availability of content authoring and workflow approvals, flexible layout and content components, multiple color themes, support for ‘dark mode’ and other user customizations, multiple language support, system-wide notification alert capability, and a wide range of other features all available without the need for custom coding or advanced technical knowledge.”

According to Martin, the platform has been a hit with Rhode Island’s people as well. He says, “We have received very positive, unsolicited feedback from citizens around the state regarding their new RI State government websites.”

He adds, “Being named the winner of the Leader of the Pack – Public Sector in the 2022 Acquia Engage Awards is proof positive that we’re on track towards achieving our service delivery goals.”

One More Trophy for the Case

With a big project like this, there’s always plenty to learn. Hartman points to the process of migrating the state’s vast trove of legacy content to the new sites as a key area that will inform future client work.

But enough lessons: for now, the team is celebrating the big win.

“Our fearless leader Chris Murray attended the awards ceremony in Miami,” Hartman says. “We have an awards wall at the office where we display everything we’ve won from design accolades to this prestigious award. I have no doubt the Acquia Award will be there the next time I visit the office.”

And after redesigning the entire web presence for Rhode Island’s many state agencies and completely revamping how the state communicates with its citizens, surely the Oomph team are now honorary members of Rhode Island’s government?

“Absolutely,” Hartman laughs. “And as my first initiative as a member of this great state’s government, I’ll be bringing the PawSox back to the Bucket!”

Interested in learning more about Oomph’s award-winning work? We don’t blame you. View more of our case studies to see how we make a difference for clients nationwide.

A “Read More” Teaser is still a common UX pattern; it contains a title, often a summary, sometimes a date or author info, and, of course, a Read More link. The link text itself may differ — “read more”, “learn more”, “go to article”, and so on — but the function is the same: tell the user that what they see is only a preview, and link them to the full content.

In 2022, I’d argue that “Read More” links on Teaser components are more of a design feature than a functional requirement; they add a visual call to action to a list of Teasers or Cards, but they aren’t necessary for navigation or clarity.

Although I see fewer sites using this pattern recently, it’s still prevalent enough on the web and in UX designs to justify discussing it.

If you build sites with Drupal, you have two simple options for implementing a Read More Teaser. Both methods have some drawbacks, and both result in adjacent and non-descriptive links.

I’ll explore both options in detail and discuss how to resolve accessibility concerns with a little help from TWIG. (Spoiler: TWIG gives us the best markup without any additional modules.)

Using Core

Without adding contributed modules or code, Drupal Core gives you a long, rich text field type for storing lengthy content and a summary of that content. It’s a single field that acts like two. In the UI, this field type is called Text (formatted, long, with summary), and it does exactly what the name describes.

Drupal installs two Content Types that use this field type for the Body – Article and Basic Page – but it can be added to any Content Type that needs both a full and a summarized display.

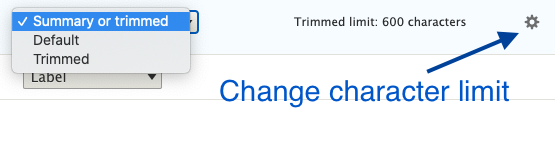

In the Manage Display settings of the Teaser or other summarized view mode, the Summary or Trimmed format is available. The default character limit is 600, but this can be configured higher or lower.

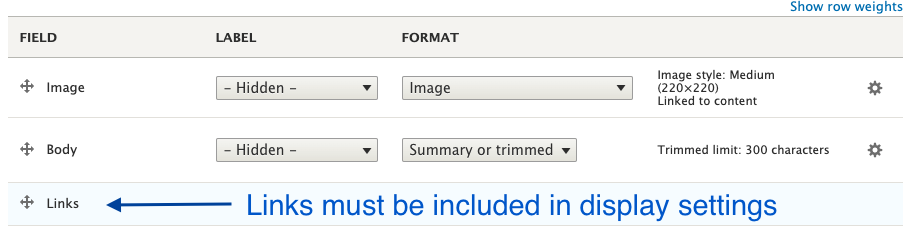

Finally, in the Manage Display settings, the Links field must be added to render the Read More link.

That’s all you need to create a Read More Teaser in just a few minutes with Drupal Core, but this method has some shortcomings.

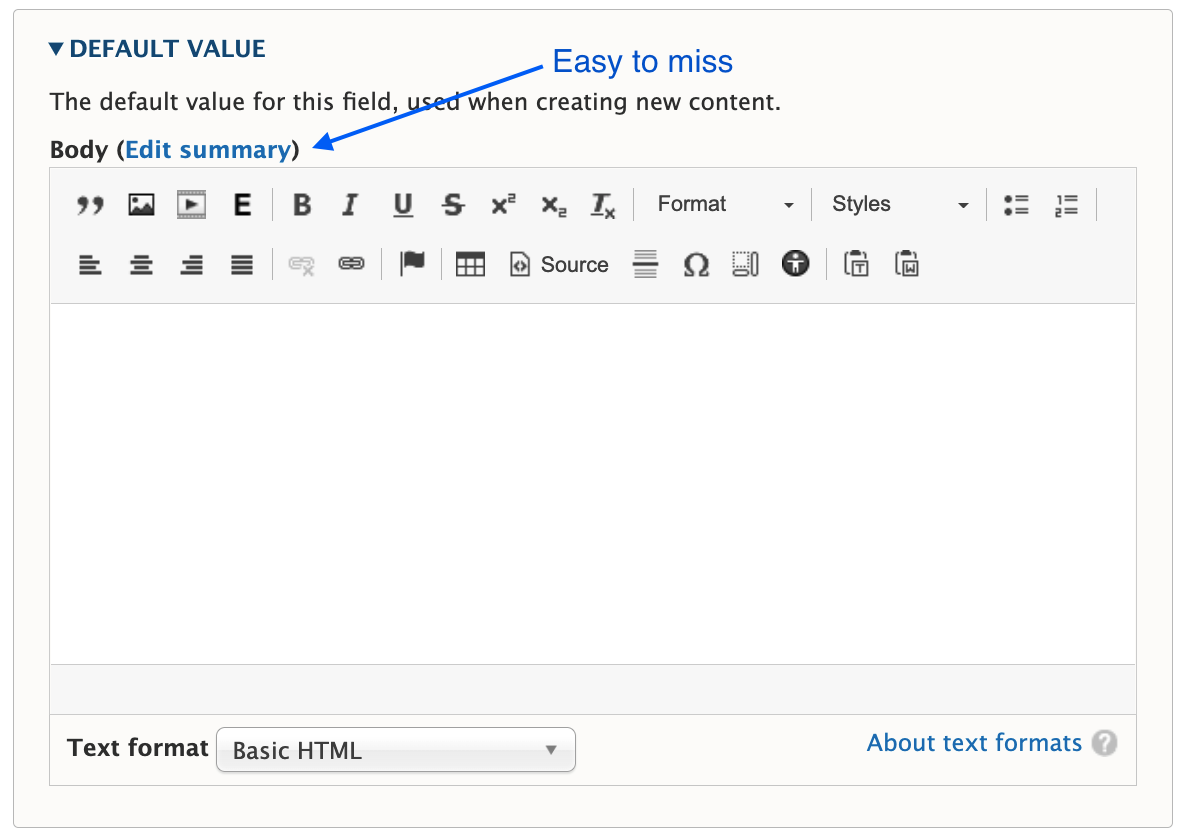

The summary field is easy to miss

Unless you require the summary, it’s often overlooked during content creation. A user has to click the Edit summary link to add a summary, and it’s easy to miss or forget.

You have to choose one or the other format

Notice the word “or” in the Summary or Trimmed format. Out of the gate, you can display what’s in the summary field, in its entirety, or you can display a trimmed version of the Body field. But you can’t display a trimmed version of the summary.
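To make the “or” concrete, here is a simplified Python model of the formatter’s decision, assuming plain-text input. `summary_or_trimmed` is a hypothetical helper, not Drupal’s PHP code, and the body cut is deliberately naive. The point is that a non-empty summary passes through untouched, whatever its length.

```python
def summary_or_trimmed(summary: str, body: str, trim_length: int = 600) -> str:
    """Simplified model of the "Summary or Trimmed" choice (hypothetical helper).

    If a summary exists, it is returned whole, however long it is.
    Only when it is empty does the body get trimmed.
    """
    if summary.strip():
        return summary  # the summary wins and is never trimmed
    return body[:trim_length]  # naive cut; Core's real trimming is sentence-aware


long_summary = "A" * 900
print(len(summary_or_trimmed(long_summary, "Body text", trim_length=600)))  # 900
print(summary_or_trimmed("", "Body text that is long", trim_length=9))  # Body text
```

Even with a 600-character limit, the 900-character summary comes back in full. The limit only ever applies to the Body fallback.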

Expect the unexpected when a summary isn’t present

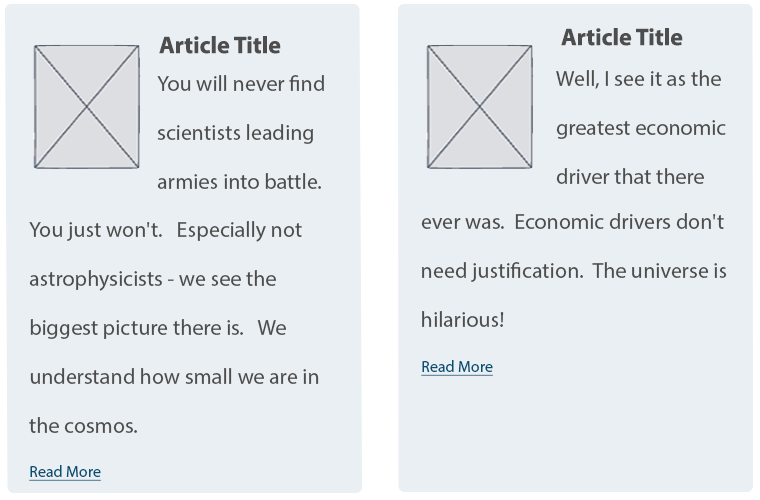

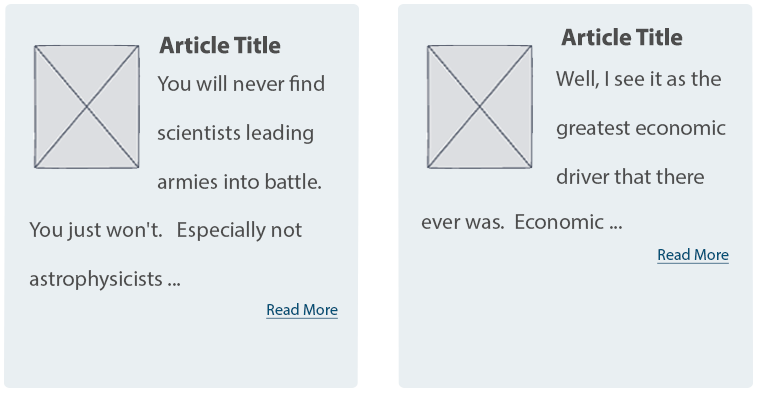

If a summary is not populated, Drupal attempts to trim the Body field to the last full sentence, while staying under the character limit. The problem is the unpredictable nature of content in a rich text field. These may be edge cases, but I’ve seen content editors insert images, headings, dates, and links as the first few elements in the Body field. When the content starts with something other than paragraph text, the Teaser display will, too.
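To make the either/or behavior and the sentence trimming concrete, here is a simplified Python model of the logic described above. It is not Drupal’s own text_summary() code, which also looks for paragraph and line breaks and works on HTML; the function name and the sentence-ending rule are assumptions for illustration.

```python
import re


def summary_or_trimmed(summary: str, body: str, limit: int = 600) -> str:
    """Simplified model of Core's 'Summary or Trimmed' formatter.

    Assumptions: plain text only, and a sentence ends at '.', '!' or '?'
    followed by whitespace or the end of the text.
    """
    # A populated summary is shown in its entirety; it is never trimmed.
    if summary.strip():
        return summary
    # Body text that already fits under the limit is shown as-is.
    if len(body) <= limit:
        return body
    window = body[:limit]
    # Keep everything up to the last sentence ending inside the window.
    ends = [m.end() for m in re.finditer(r"[.!?](?=\s|$)", window)]
    if ends:
        return window[: ends[-1]]
    # No complete sentence fits: fall back to a hard cut at the limit.
    return window
```

Note that whatever comes first in the Body (a heading, a date, a link) is what ends up in the window, which is why the Teaser can start with something other than paragraph text.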

Expect varying-length Teasers when a summary is present

Depending on your layout or visual preference, this may not be a concern. But unless you’re judicious about using a standard or similar length for every summary, you’ll have varying-length Teasers, which look awkward when displayed side by side.

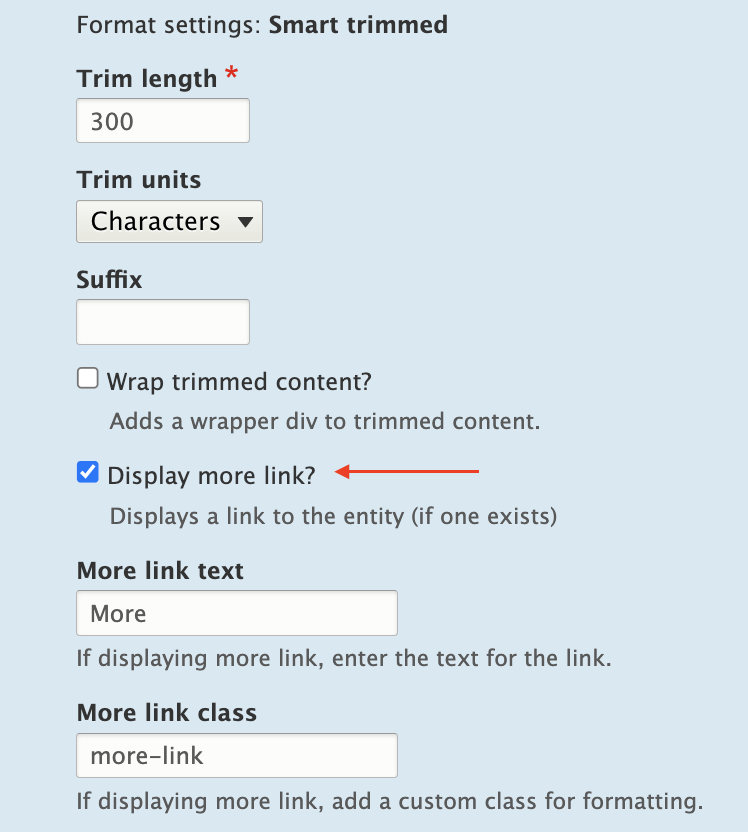

Using the Smart Trim Module

The contributed Smart Trim module expands on Core’s functionality with additional settings to “smartly” trim the field it’s applied to. The Smart Trim module’s description states:

With smart trim, you have control over:

- The trim length

- Whether the trim length is measured in characters or words

- Appending an optional suffix at the trim point

- Displaying an optional “More” link immediately after the trimmed text

- Stripping out HTML tags from the field

I won’t cover all of the features of Smart Trim, because it’s well documented already. Smart Trim solves one of the problems noted earlier: it lets you trim the summary. That said, Smart Trim has some of the same drawbacks as Core’s trim feature, along with some limitations of its own.

Sentences aren’t respected

Unlike Core’s trim feature, Smart Trim’s limits don’t look for full sentences. A character or word limitation stops when it hits the defined limit, even if that limit occurs mid-sentence.
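To see why a limit can land mid-sentence, here is a hypothetical Python function modeled on the options listed above (trim length, characters or words, optional suffix). It is not the module’s code; in this sketch the suffix is added only when something was actually cut.

```python
def smart_trim(text: str, length: int, unit: str = "chars", suffix: str = "") -> str:
    """Hypothetical model of Smart Trim's length options (not the module's code).

    unit="chars" cuts at `length` characters; unit="words" keeps `length` words.
    """
    if unit == "words":
        words = text.split()
        if len(words) <= length:
            return text
        trimmed = " ".join(words[:length])
    elif unit == "chars":
        if len(text) <= length:
            return text
        trimmed = text[:length].rstrip()
    else:
        raise ValueError(f"unknown unit: {unit!r}")
    # No sentence detection: the cut lands wherever the limit falls.
    return trimmed + suffix


text = "Practical tips on cake baking. You can't find them anywhere else."
print(smart_trim(text, 3, "words", "..."))   # Practical tips on...
print(smart_trim(text, 12, "chars", "..."))  # Practical ti...
```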

Strip HTML might be helpful, or it might not

If your goal is rendering plain text without any HTML (something you might otherwise have to code in a template), Smart Trim’s Strip HTML setting is exactly what you need.

It’s also helpful for dealing with some of those edge cases where content starts with an element other than paragraph text. For example, if the first element in the Body field is an image, followed by paragraph text, the Strip HTML setting works nicely. It strips the image entirely and displays the text that follows in a trimmed format.

But be aware that Strip HTML also strips paragraph tags. If the first element in the Body field is a heading, followed by paragraph text, all tags are stripped, and everything runs together as one large block of text.
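Both outcomes follow from how tag stripping works. The Python sketch below uses a regular expression as a rough stand-in for PHP’s strip_tags(); it is only an illustration, not what Smart Trim runs internally.

```python
import re


def strip_html(markup: str) -> str:
    """Rough stand-in for PHP's strip_tags(): remove anything shaped like a tag.

    Illustration only; real tag stripping also has to deal with comments,
    attributes that contain '>', and so on.
    """
    return re.sub(r"<[^>]*>", "", markup)


# An image first, then text: the image disappears and the text survives.
print(strip_html('<p><img src="cake.jpg" alt="A cake"></p><p>Mix the batter.</p>'))
# prints: Mix the batter.

# A heading first, then text: the paragraph break is lost, so they run together.
print(strip_html("<h2>Before You Start</h2><p>Preheat the oven.</p>"))
# prints: Before You StartPreheat the oven.
```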

“Immediately after” doesn’t mean immediately after

If you want the Read More link to display immediately after the trimmed text, as the module description suggests, you’ll need to assign the link a class in the Smart Trim settings and style that class with a CSS display value other than block (inline, for example).

What about adjacent link and accessibility issues?

If you create a Read More Teaser using either the Core or Smart Trim methods described here, there are a couple of problems.

Either method results in markup something like this:

```html
<article>
  <h2>
    <a href="/article/how-bake-cake">How to Bake a Cake</a>
  </h2>
  <div class="node__content">
    <div class="node__summary">
      <p>Practical tips on cake baking that you can't find anywhere else</p>
    </div>
    <div class="node__links">
      <ul class="links inline">
        <li class="node__readmore">
          <a href="/article/how-bake-cake" title="How to Bake a Cake">Read more</a>
        </li>
      </ul>
    </div>
  </div>
</article>
```

The <a> inside the <h2> contains descriptive text, which is necessary according to Google’s Link text recommendations and Mozilla’s Accessibility guidelines for link text.

But the Read More link has text that is not descriptive. Furthermore, it navigates to the same resource as the first <a>, making it an adjacent or redundant link. Without any other intervention, this markup causes repetition for keyboard and assistive technology users.
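One way to check teaser markup for these problems is a small script that collects every link, groups the links by destination, and flags generic link text. This sketch uses only Python’s standard-library html.parser; the VAGUE_TEXT list and the function names are hypothetical, and it assumes you paste in (or fetch) the rendered teaser HTML. It complements, rather than replaces, testing with a keyboard and a screen reader.

```python
from collections import defaultdict
from html.parser import HTMLParser

# Hypothetical list of link texts to flag; extend it for your own site.
VAGUE_TEXT = {"read more", "more", "click here", "learn more"}


class LinkCollector(HTMLParser):
    """Collects [href, visible text] for every <a> in a fragment of markup."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current = [dict(attrs).get("href", ""), ""]
            self.links.append(self._current)

    def handle_endtag(self, tag):
        if tag == "a":
            self._current = None

    def handle_data(self, data):
        # Only text inside an open link counts toward its link text.
        if self._current is not None:
            self._current[1] += " " + data


def audit_links(markup: str) -> dict:
    parser = LinkCollector()
    parser.feed(markup)
    parser.close()
    by_href = defaultdict(list)
    for href, text in parser.links:
        by_href[href].append(" ".join(text.split()))  # normalize whitespace
    return {
        # More than one link to the same URL: keyboard users reach it twice.
        "redundant": {h: t for h, t in by_href.items() if len(t) > 1},
        # Link text that says nothing about where the link goes.
        "vague": [t for texts in by_href.values() for t in texts if t.lower() in VAGUE_TEXT],
    }
```

Run against the Core or Smart Trim markup shown above, this reports the article URL as redundant and “Read more” as vague; a teaser wrapped in a single link produces an empty report.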

How do you make it better?

WCAG has a technique for this situation: H2, “Combining adjacent image and text links for the same resource.” You can apply the same logic and wrap the entire Teaser content with a single link. But for this, you need TWIG.

Here’s an example of how to structure a TWIG template to create the link-wrapped Teaser.

node--article--teaser.html.twig:

```twig
<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    {# Quote the attribute value so the URL is always parsed as one attribute. #}
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {{ content.body }}
      </div>
    </a>
  </div>
</article>
```

But while this solves the adjacent link issue, it creates another problem. When you combine this template with the Manage Display settings that insert Read More links, Drupal creates many, many duplicate links.

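Instead of wrapping the Teaser content in a single anchor tag, anchors are inserted around every individual element (and sometimes around nothing). This happens because the Read More link now sits inside the wrapping link, and an <a> cannot contain another <a>; the browser’s HTML parser repairs the invalid nesting by closing the outer link and cloning it around each element. The markup is worse than before: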

```html
<div class="node__content">
  <a href="/article/how-bake-cake"> </a>
  <div class="wrapper">
    <a href="/article/how-bake-cake">
      <h2 class="node__title">How to Bake a Cake</h2>
    </a>
    <div class="field field--name-body field--type-text-with-summary">
      <a href="/article/how-bake-cake">
        <div class="field__label visually-hidden">Body</div>
      </a>
      <div class="field__item">
        <a href="/article/how-bake-cake"></a>
        <div class="trimmed">
          <a href="/article/how-bake-cake">
            <p>Practical tips on cake baking that you can’t find anywhere else</p>
          </a>
          <div class="more-link-x">
            <a href="/article/how-bake-cake"></a>
            <a href="/article/how-bake-cake" class="more-link-x" hreflang="en">More</a>
          </div>
        </div>
      </div>
    </div>
  </div>
</div>
```

But if you remove Smart Trim’s Read more link or Core’s Links field, the Read More text is also gone.

```html
<div class="node__content">
  <a href="/article/how-bake-cake">
    <div class="wrapper">
      <h2 class="node__title">How to Bake a Cake</h2>
      <div class="field field--name-body field--type-text-with-summary">
        <div class="field__label visually-hidden">Body</div>
        <div class="field__item">
          <p>Practical tips on cake baking that you can’t find anywhere else</p>
        </div>
      </div>
    </div>
  </a>
</div>
```

Because we’ve already enlisted the help of TWIG to fix the adjacent link issue, it’s easy enough to also use TWIG to re-insert the words “read more.” This creates the appearance of a Read More link and wraps all of the Teaser contents in a single anchor tag.

```twig
<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {{ content.body }}
        {# Plain text, not a link: the whole Teaser is already wrapped in one <a>. #}
        <span class="node__readmore">{{ 'Read More'|trans }}</span>
      </div>
    </a>
  </div>
</article>
```

Resulting HTML:

```html
<div class="node__content">
  <a href="/article/how-bake-cake">
    <div class="wrapper">
      <h2 class="node__title">How to Bake a Cake</h2>
      <div class="field field--name-body field--type-text-with-summary">
        <div class="field__label visually-hidden">Body</div>
        <div class="field__item">
          <p>Practical tips on cake baking that you can’t find anywhere else</p>
        </div>
      </div>
      <span class="node__readmore">Read More</span>
    </div>
  </a>
</div>
```

Do it all with TWIG

Of course, you can skip both Core’s trimming feature and the Smart Trim module and do everything you need with TWIG.

Using the same TWIG template, you can strip the HTML from the Body field’s value, truncate it to a set number of characters, drop the last (possibly partial) word, and follow it with an ellipsis and the “Read More” text.

Something like the following should do the trick in most cases.

node--article--teaser.html.twig:

```twig
<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {# Strip tags, cut to 175 characters, drop the last (possibly partial) word, add an ellipsis. #}
        {{ node.body.0.value|striptags|slice(0, 175)|split(' ')|slice(0, -1)|join(' ') ~ '...' }}
        <span class="node__readmore">{{ 'Read More'|trans }}</span>
      </div>
    </a>
  </div>
</article>
```
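To see exactly what that filter chain produces, here is a Python model of it. The regular expression only approximates Twig’s striptags filter, and the guard_short option is an addition that is not part of the template: it illustrates one edge case worth knowing about, namely that Body text shorter than the limit still loses its last word and gains an ellipsis.

```python
import re


def twig_teaser_text(body_html: str, limit: int = 175, guard_short: bool = False) -> str:
    """Python model of: striptags|slice(0, limit)|split(' ')|slice(0, -1)|join(' ') ~ '...'

    guard_short=True is an addition (not in the template): it returns text
    that already fits the limit unchanged instead of dropping its last word.
    """
    text = re.sub(r"<[^>]*>", "", body_html)  # rough stand-in for striptags
    if guard_short and len(text) <= limit:
        return text
    words = text[:limit].split(" ")           # slice(0, limit)|split(' ')
    return " ".join(words[:-1]) + "..."       # slice(0, -1)|join(' ') ~ '...'


body = "<p>Practical tips on cake baking that you can't find anywhere else</p>"
print(twig_teaser_text(body))
# prints: Practical tips on cake baking that you can't find anywhere...
```

If short summaries are common on your site, a similar length check in the template (an if/else on the stripped text’s length) avoids the lost word.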

Final Thoughts

Today’s internet doesn’t need a prompt on each Teaser or Card to indicate that there is, in fact, more to read. The Read More Teaser feels like a relic from bygone days when, perhaps, it wasn’t obvious that a Teaser was only a summary.

But until we, the creators of websites, collectively decide to abandon the Read More Teaser pattern, developers will have to keep building them. And we should make them as accessible as we can in the process.

Although Drupal provides simple methods to implement this pattern, it’s the templating system that really shines here and solves the accessibility problems.

Are you a developer looking for a new opportunity? Join our team.

THE BRIEF

Never Stopping, Always Evolving

Leica Geosystems was built on cutting-edge technology and continues to push the envelope with its revolutionary products. The company was founded by Heinrich Wild and made its first rangefinder in 1921. Fast-forward to the 21st century, and Leica Geosystems is the leading manufacturer of precision laser technology used for measurements in architecture, construction, historic preservation, and DIY home remodeling projects.

Oomph and Leica collaborated on an initial project in 2014 and have completed multiple projects since. We transitioned the site into a brand-new codebase with Drupal 8. With this conversion, Oomph smoothed out the Leica team’s pain points related to a multisite architecture. We created a tightly integrated single site that can still serve multiple countries, languages, and currencies.

THE CHALLENGE

Feeling the Pain-points with Multisite

Leica’s e-commerce store is active in multiple countries and languages. Managing content in a Drupal multisite environment meant managing multiple sites. Product, content, and price changes were difficult. It was Oomph’s challenge to make content and product management easier for the Leica team as well as support the ability to create new country sites on demand. Leica’s new e-commerce site needed to support:

MULTIPLE COUNTRIES AND A GLOBAL OPTION

SIX LANGUAGES

MANY 3RD-PARTY INTEGRATIONS

The root problem with the previous multisite architecture was that each country was a silo:

- No Single Sign On (SSO): Multiple admin log-ins to remember

- Repetitive updates: Running Drupal’s update script on every site and testing was a lengthy process

- Multiple stores: Multiple product lists, product features, and prices

- Multiple sites to translate: each site was sent individually to be translated into one language

THE APPROACH

Creating a Singularity with Drupal 8, Domain Access, & Drupal Commerce

A move to Drupal 8 in combination with some smart choices in module support and customization simplified many aspects of the Leica team’s workflow, including:

- Configuration management: Drupal 8’s introduction of configuration management in core means that point-and-click admin configuration can get exported from one environment and imported into another, syncing multiple environments and saving configuration in our code repository

- One Database to Rule Them All: Admins have a single site to log into and do their work, and developers have one site to update, patch, and configure

- One Commerce Install, Multiple stores: There is one Drupal Commerce 2.x install with multiple stores with one set of products. Each product has the ability to be assigned to multiple stores, and price lists per country control product pricing

- One Page in Multiple Countries and Multiple Languages: The new single-site model gives each piece of content one place to live. Authors control which countries it is available in, and it is translated once into all available languages.

- Future-proof: With a smooth upgrade path to Drupal 9 in 2020, the Drupal 8 site gives Leica more longevity in the Drupal ecosystem

LEARN VS. SHOP

Supporting Visitor Intention with Two Different Modes

While the technical challenges were being worked out, the user experience and design had to reflect a cutting-edge company. With the launch of their revolutionary product, the BLK 360, in 2018, Leica positioned itself as the Apple of the geospatial measurement community — sleek, cool, cutting-edge and easy to use. While many companies want to look as good as Apple, few of them actually have the content and product to back it up.

The navigation for the site went through many rounds of feedback and testing before deciding on something radically simple — Learn or Shop. A customer on the website is either in an exploratory state of mind — browsing, comparing, reviewing pricing and specifications — or they are ready to buy. We made it very clear which part of the website was for which.

This allowed us to talk directly to the customer in two very different ways. On the Learn side, the pages educate and convince. They give the customer information about the product, reviews, articles, sample data files, and the like. The content is big, sleek, and leverages video and other embedded content, like VR, to educate.

On the Shop side, the pages are unapologetically transactional. Give the visitor the right information to support a purchase, clearly deliver specs and options like software and warranties, without any marketing. We could assume the customer was here to purchase, not to be convinced, so the page content could concentrate on order completion. The entire checkout process was simplified as much as possible to reduce friction. We studied the buying habits and patterns of Leica’s user base over the past few site iterations to decide where to simplify and where to offer options.

THE RESULTS

More Nimble Together

The willingness of the Drupal community to support the needs of this project cannot be overlooked, either. Oomph has been able to leverage our team’s commitment to open source contributions to get other developers to add features to the modules they support. Without the give and take of the community and our commitment to give back, many modifications and customizations for this project would have been much more difficult. The team at Centarro, maintainers of the Commerce module, were fantastic to work with and we thank them.

We look forward to continuing to support Leica Geosystems and their product line worldwide. With a smooth upgrade path to Drupal 9 in 2020, the site is ready for the next big upgrade.

Is your digital platform still on Drupal 7? By now, hopefully, you’ve heard that this revered content management system is approaching its end of life. In November 2023, official Drupal 7 support from the Drupal community will end, including bug fixes, critical security updates, and other enhancements.

If you’re not already planning for a transition from Drupal 7, it’s time to start.

With nearly 12 million websites currently hacked or infected, Drupal 7’s end of life carries significant security implications for your platform. In addition, any plug-ins or modules that power your site won’t be supported, and site maintenance will depend entirely on your internal resources.

Let’s take a brief look at your options for transitioning off Drupal 7, along with five crucial planning considerations.

What Are Your Options?

In a nutshell, you have two choices: upgrade to Drupal 9, or migrate to a completely new CMS.

With Drupal 9’s advanced features and functionalities, migrating from Drupal 7 to 9 involves much more than applying an update to your existing platform. You’ll need to migrate all of your data to a brand-new Drupal 9 site, with a whole new theme system and platform requirements.

Drupal 9 also requires a different developer skill set. If your developers have been on Drupal 7 for a long time, you’ll need to factor a learning curve into your schedule and budget, not only for the migration but also for ongoing development work and maintenance after the upgrade.

As an alternative, you could take the opportunity to build a new platform with a completely different CMS. This might make sense if you’ve experienced a lot of pain points with Drupal 7, although it’s worth investigating whether those problems have been addressed in Drupal 9.

Drupal 10

What of Drupal 10, you ask? Drupal 10 is slated to be released in December 2022, but it will take some time for community contributed modules to be updated to support the new version. Once ready, updating from Drupal 9 to Drupal 10 should be a breeze.

Preparing for the Transition

Whether you decide to migrate to Drupal 9 or a new CMS, your planning should include the following five elements:

Content Audit

Do a thorough content inventory and audit, examine user analytics, and revisit your content strategy, so you can identify which content is adding real value to your business (and which isn’t).

Some questions to ask:

- Does your current content support your strategy and business goals?

- How much of the content is still relevant for your platform?

- Does the content effectively engage your target audience?

Another thing to consider is your overall content architecture: Is it a Frankenstein that needs refinement or revision? Hold that thought; in just a bit, we’ll cover some factors in choosing a CMS.

Design Evaluation

As digital experiences have evolved, so have user expectations. Chances are, your Drupal 7 site is starting to show its age. Be sure to budget time for design effort, even if you would prefer to keep your current design.

Drupal 7 site themes can’t be moved to a new CMS, or a different version of Drupal, without a lot of development work. Even if you want to keep the existing design, you’ll have to retheme the entire site, because you can’t apply your old theme to a new backend.

You’ll also want to consider the impact of a new design and architecture on your existing content, as there’s a good chance that even using an existing theme will require some content development.

Integrations

What integrations does your current site have, or need? How relevant and secure are your existing features and modules? Be sure to identify any modules that have been customized or are not yet compatible with Drupal 9, as they’ll likely require development work if you want to keep them.

Are your current vendors working well for you, or is it time to try something new? There are more microservices available than ever, offering specialized services that provide immense value with low development costs. Plus, much of the functionality that required contributed modules in D7 is now part of D9 core.

CMS Selection & Architecture

Building the next iteration of your platform requires more than just CMS selection. It’s about defining an architecture that balances cost with flexibility. Did your Drupal 7 site feel rigid and inflexible? What features do you wish you had – more layout flexibility, different integrations, more workflow tools, better reporting, a faster user interface?

For content being brought forward, will migrating be difficult? Can it be automated? Should it be? If you’re not upgrading to Drupal 9, you could take the opportunity to switch to a headless CMS product, where the content repository is independent of the platform’s front end. In that case, you’d likely pay monthly fees for the product but wouldn’t need to maintain it yourself. On the flip side, many headless CMS platforms (Contentful, for example) don’t allow for customization.

Budget Considerations

There are three major areas to consider in planning your budget: hosting costs, feature enhancements, and ongoing maintenance and support. All of these are affected by the size and scope of your migration and the nature of your internal development team.

Key things to consider:

- Will the newly proposed architecture be supported by existing hosting? Will you need one vendor or several (if you’re using microservices)?

- If you take advantage of low or no-cost options for hosting, you could put the cost savings toward microservices for feature enhancements.

- Given Drupal 9’s platform requirements, you’ll need to factor in the development costs of upgrading your platform infrastructure.

- Will your new platform need regular updates? How often might you add feature enhancements and performance optimizations? And do you have an internal dev team to handle the work?

Look on the Bright Side

Whatever path you choose, transitioning from Drupal 7 to a new CMS is no small task. But there’s a bright side, if you view it as an opportunity. A lot has changed on the web since Drupal 7 was launched! This is a great time to explore how you can update and enhance your platform user experience.

Re-platforming is a complex process, and it’s one that we love guiding clients through. By partnering with an experienced Drupal development firm, most migrations can be planned and implemented more quickly, with better outcomes for your brand and your audience.

From organizing the project, to vendor and service selection, to budget management, we’ve helped a number of organizations bring their platforms into the modern web. To see an example, check out this design update for the American Veterinary Medical Association.

Need help figuring out the best next step for your Drupal 7 platform? Contact us today for a free consultation.

As a back-end developer, I’m used to building stuff that people interact with every day but never actually see. I create that layer beneath the surface that makes things work the way people expect them to — and I’m the one who gets called in when something goes wrong. Either way, I spend a lot of time unraveling puzzles and reimagining solutions, forever pushing the limits of what software can do.

I make things work; that’s what I love about my job. It’s also the reason why I like being part of the Open Source Software (OSS) community. OSS offers nearly infinite opportunities to solve problems or build things that didn’t exist before.

Plus, as an open source contributor, by writing one little fix you could be helping hundreds or thousands of digital platforms.

Why Open Source Matters to Me

I first got involved with OSS back in 2007, when I used Drupal CMS for a client project. I spent a few years getting comfortable with the software and kind of dipping a toe in the community aspect. After a while, I’d been consuming Drupal so much that I started to feel bad about using all that free software and not giving anything back.

Then I had a project come along that needed a custom feature for Drupal that didn’t exist yet in the open source space. I wrote the module and gave it back to the community, working with other open source contributors who needed it too. That’s when I discovered how rewarding it is when you build something and people actually use it.

When you’re working on a project and you find a problem, you could just fix it and move on. But when you say, “Hey, somebody else might find this useful,” and you take that extra 30 minutes to give the code back to the community, you make everybody better.

I love the feeling that giving back gives you, especially when you fix something that thousands of other people use.

From Dipping a Toe to the Deep End

For me, being an OSS contributor comes with a sense of responsibility. It’s rewarding when you fix an issue for other developers, but it also makes you not want to screw up something that thousands of other sites report using. So I’m always mindful of releasing quality code. Maybe that’s also why I became a maintainer.

Years ago, I was using a contributed theme that someone else had written as a starting point for a lot of the projects I worked on. Then the sole maintainer passed away, and a lot of us still wanted to use the theme. So a coworker and I offered to maintain it — I couldn’t just walk away from something I’d been benefiting from for so long.

Today, I regularly submit code to open source communities, and I’m a maintainer of nine different open source modules. How did that happen, you ask? Well… sometimes I’ll recommend that a client use an unmaintained module in their application, and then, you know, somebody’s got to take care of it.

What can I say? I also feed the stray cats in my neighborhood.

I Get to Do This for Work?!

Problem-solving is the best part of my job. And with OSS, you’re always going to have a problem to solve, or a bug to fix, or a new feature to build every time someone says, “You know, it would be great if this module did this.” That’s when my teammates and I roll up our sleeves and say, “Okay, how are we going to make this work?” When we find a solution, we give it back.

Fortunately, I work at an agency that actively encourages us to make open source contributions. We recognize the value we get from OSS, and that’s why we go the extra mile to support the community. I can build OSS fixes while I’m working on client projects, or take professional growth days to focus on open source work.

I don’t know if that’s true of all agencies, but I’m lucky enough to work somewhere that believes in making an impact.

Want to come build things with us? Check out our open positions.

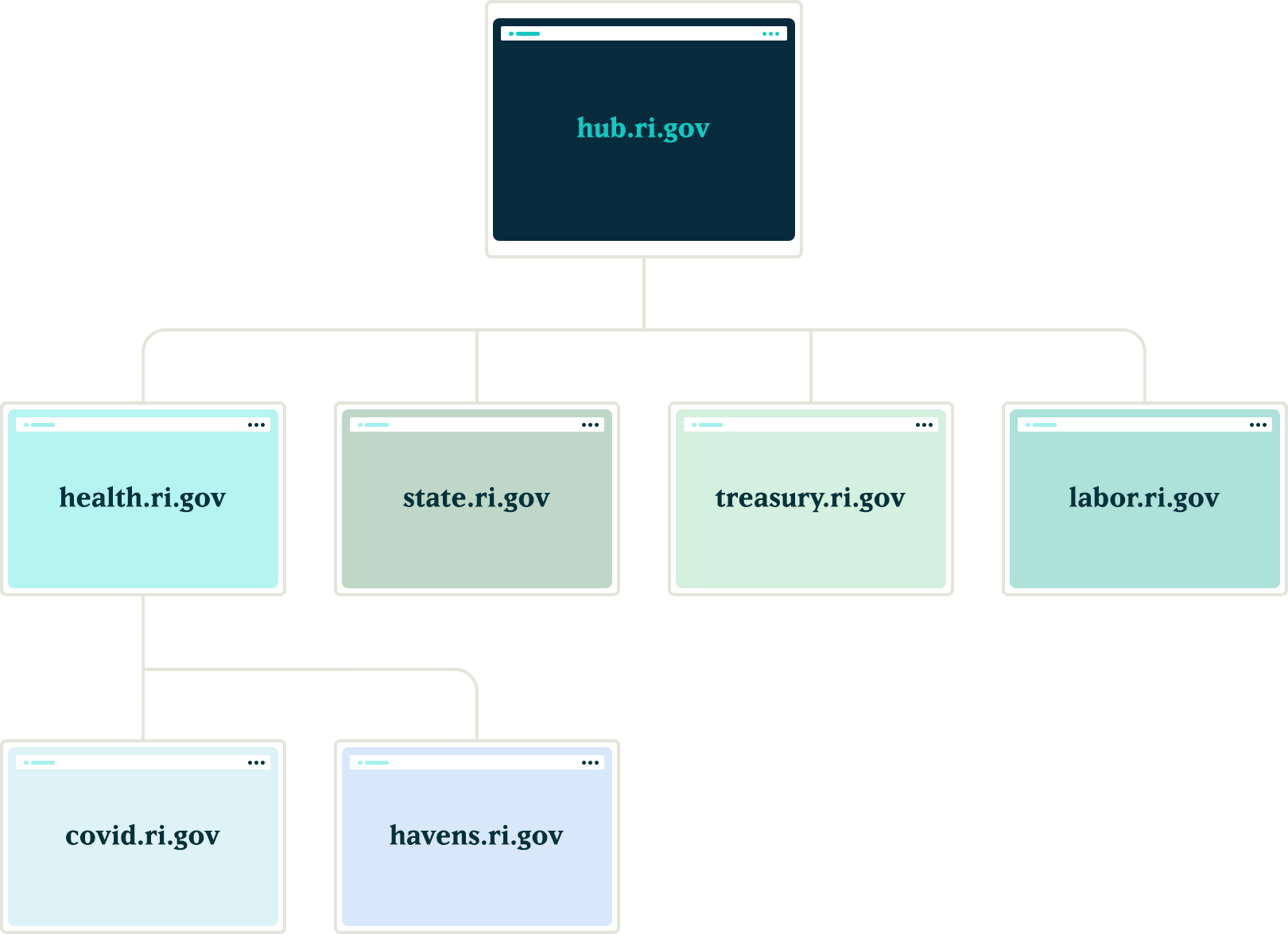

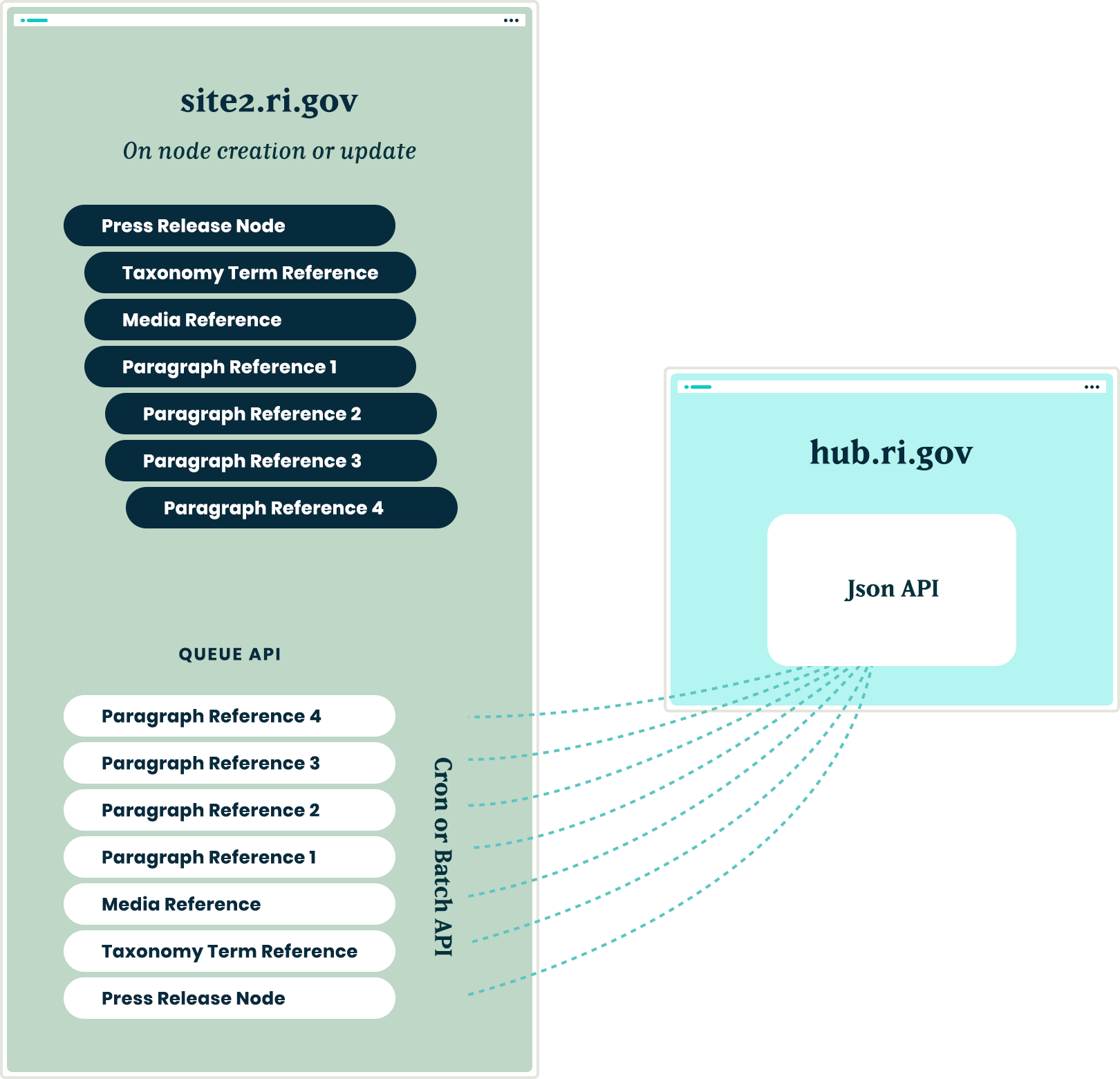

How we leveraged Drupal’s native APIs to push notifications to the many department websites for the State.

RI.gov is a custom Drupal distribution that was built with the sole purpose of running hundreds of department websites for the state of Rhode Island. The platform leverages a design system for flexible page building, custom authoring permissions, and a series of custom tools to make authoring and distributing content across multiple sites more efficient.


The Challenge

The platform had many business requirements, and one stated that a global notification needed to be published to all department sites in near real-time. These notifications would communicate important department information on all related sites. Further, the individual websites needed to ingest these notifications as local content so they could be indexed for search.

The hierarchy of the departments and their sites added a layer of complexity to this requirement. A department needs to create notifications that broadcast only to its subsidiary sites, not the entire network. For example, the Department of Health might need to create a health-department-specific notification that gets pushed to the Covid site, the RIHavens site, and the RIDelivers site, but not to an unrelated department like DEM.

Exploration

Aggregator

Our first idea was to use Drupal’s built-in Aggregator module to pull notifications from the hub. A proof of concept showed that, while it worked well for pulling content from the hub site, it had a few problems:

- It relied heavily on each local site’s cron job to pull updates, which caused timing issues: content did not arrive in near real-time. Due to server limitations, we could not run cron as often as would be necessary.

- We would also need to maintain two entity types, one for global notifications and one for local site notifications. Keeping local and global notifications as the same entity type would make this subsystem easier to maintain.

Feeds

Another thought was to use the Feeds module to pull content from the hub into the local sites. This was a better solution than Aggregator because the nodes would be created locally and could be indexed for local search. Unfortunately, Feeds relied on cron as well.

Our Solution

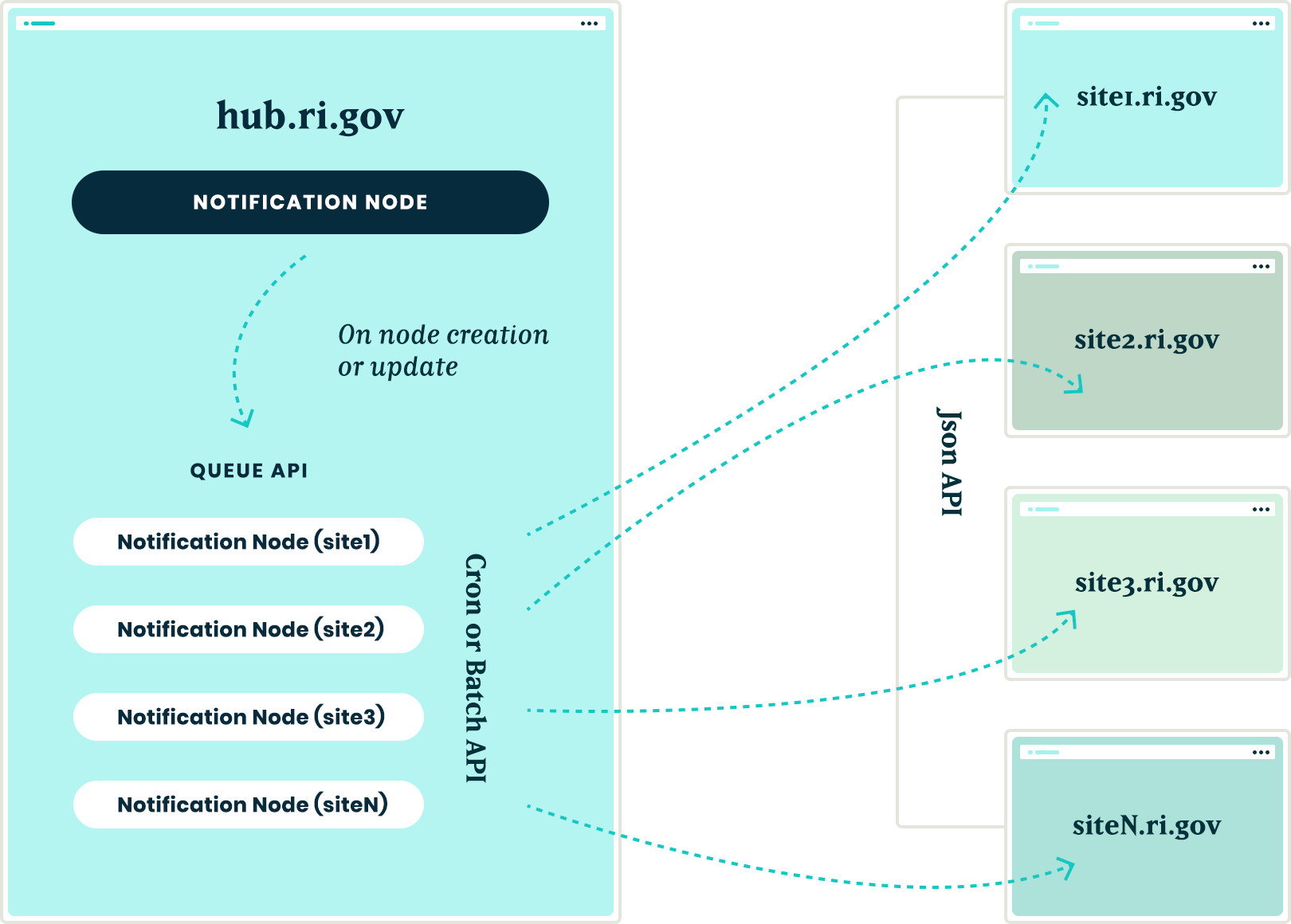

JSON API

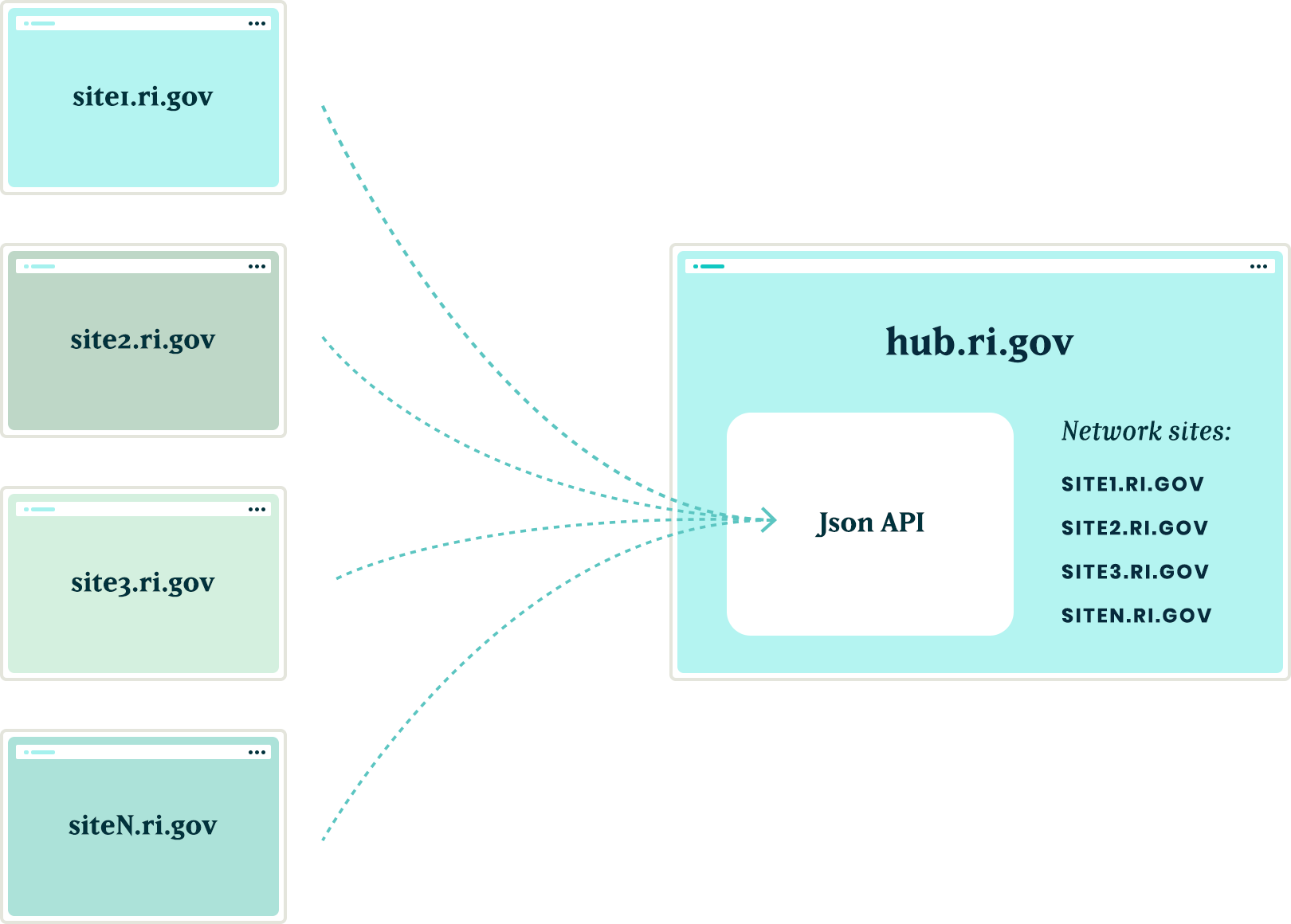

We created a suite of custom modules that centered around moving data between the network sites using Drupal’s JSON API. The API was used to register new sites to the main hub when they came online. It was also used to pass content entities from the main hub down to all sites within the network and from the network sites back to the hub.

Notifications

In order to share content between all of the sites, we needed to ensure that the data structure was identical on all sites in the network. We started by creating a new notification content type that had a title field, a body field, and a boolean checkbox indicating whether the notification should be considered global. Then, we packaged the configuration for this content type using the Features module.

By requiring our new notification feature module in the installation profile, we ensured that all sites would have the required data structure whenever a new site was created. Features also allowed us to ensure that any changes to the notification data model could be applied to all sites in the future, maintaining the consistency we needed.

Network Domain Entity

In order for the main hub, ri.gov, to communicate with all sites in the network, we needed a way to know what Drupal sites existed. To do this, we created a custom configuration entity that stored the URL of each site within the network. Using this domain entity, we were able to query all known sites and pass the global notification nodes created on ri.gov to each known site using the JSON API.
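Pushing a notification to every known site amounts to one JSON:API POST per network domain entity. This Python sketch only builds the requests (it does not send them) and rests on assumptions the article doesn’t state: the content type’s machine name is notification, the global checkbox is a field named field_global, the body uses a basic_html text format, each site serves core JSON:API at /jsonapi with write operations enabled (core JSON:API accepts writes only when its read-only setting is turned off), and the bearer token comes from the OAuth setup described under Authentication.

```python
import json
from urllib.request import Request


def notification_requests(notification: dict, domains: list[str], token: str) -> list[Request]:
    """Build one JSON:API POST request per known network site (not sent here).

    Hypothetical names: bundle `notification`, field `field_global`,
    text format `basic_html`.
    """
    document = {
        "data": {
            "type": "node--notification",  # JSON:API resource type: entity--bundle
            "attributes": {
                "title": notification["title"],
                "body": {"value": notification["body"], "format": "basic_html"},
                "field_global": notification["global"],
            },
        }
    }
    payload = json.dumps(document).encode("utf-8")
    return [
        Request(
            url=f"{domain.rstrip('/')}/jsonapi/node/notification",
            data=payload,
            method="POST",
            headers={
                "Content-Type": "application/vnd.api+json",
                "Accept": "application/vnd.api+json",
                "Authorization": f"Bearer {token}",
            },
        )
        for domain in domains
    ]
```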

Queue API

To ensure that the notification nodes were posted to all the sites without timeouts, we decided to use Drupal’s Queue API. Once the notification content was created on the ri.gov hub, we queried the known domain entities and created a queue item that cron would later process to post the notification node to each site’s JSON API endpoint. We used cron here to give us some assurance that posting to many websites wouldn’t time out and fail.

Batch API

To allow time-sensitive notifications to be pushed immediately, we created a custom batch operation that reads all of the queued notifications and pushes them out one at a time. If any errors are encountered, the notification is re-queued at the end of the stack and the process continues until all notifications have been posted to the network sites.
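The queue-and-batch behavior can be modeled as a loop that posts items one at a time and moves failures to the back of the line. In this Python sketch, each item is assumed to pair one notification with one target site, and post is a placeholder for the actual HTTP call. The max_attempts cap is an addition for the sketch: as described, the batch keeps going until every notification is posted, so a site that never comes back would keep it running indefinitely.

```python
from collections import deque
from typing import Callable


def push_all(items: list, post: Callable[[dict], bool], max_attempts: int = 3) -> list:
    """Post queued items one at a time; failures go to the end of the queue.

    Returns the items that still failed after `max_attempts` tries.
    """
    queue = deque((item, 1) for item in items)
    gave_up = []
    while queue:
        item, attempt = queue.popleft()
        if post(item):
            continue  # posted successfully; move on to the next item
        if attempt < max_attempts:
            queue.append((item, attempt + 1))  # re-queue at the end of the stack
        else:
            gave_up.append(item)  # cap added in this sketch to avoid looping forever
    return gave_up
```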

New site registrations

In order to ensure that new sites receive notifications from the hub, we needed a site registration process. Whenever a new site is spun up, a custom module is installed that calls out to the hub using the JSON API and registers itself by creating a new network domain entity with its endpoint URL. This lets the hub know about the new site so it can push any new notifications to it in the future.

The installation process also queries the hub for any existing notifications: using the JSON API, it gets a list of all notification nodes from the hub and adds them to its local queue for creation. Then, the local site uses cron to query the hub for the details of each notification node and create it locally. This ensures that when a new site comes online, it has an up-to-date list of all the important notifications from the hub.

Authentication

Passing this data between sites is one challenge, but doing it securely adds another layer of complexity. All of the requests between the sites are authenticated using the Simple OAuth module. When a new site is created, an installation process creates a dedicated user in the local database that will own all notification nodes created by the syndication process. The installation process also creates the appropriate Simple OAuth consumers, which allow authenticated connections to be made between the sites.
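The article doesn’t say which OAuth 2 grant the sites use. Assuming the client-credentials grant, which suits a machine-to-machine setup with a dedicated user per site, the token request and the header for later API calls could be built like this in Python. The /oauth/token path is Simple OAuth’s token endpoint; the client ID and secret are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request


def token_request(hub_url: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) an OAuth 2 client-credentials token request.

    Assumes a Simple OAuth consumer configured for the client_credentials grant.
    """
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return Request(
        url=f"{hub_url.rstrip('/')}/oauth/token",
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def bearer_header(token_response: dict) -> dict:
    """Turn the token endpoint's decoded JSON response into an Authorization header."""
    return {"Authorization": f"Bearer {token_response['access_token']}"}
```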

Department sites

Once all of the groundwork was in place, it took minimal effort to let department sites act as hubs for their own subsidiary sites. Thus, the Department of Health can create notifications that go only to its subsidiary sites, keeping them separate from adjacent departments.
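Department hubs reduce to a tree walk: a notification created on a hub goes to every site beneath it and nowhere else. In this Python sketch, the children mapping and the site names are hypothetical, and it assumes a hub pushes directly to all of its descendants rather than relaying through intermediate hubs; the article doesn’t specify which.

```python
def target_sites(hub: str, children: dict[str, list[str]]) -> list[str]:
    """Return every site below `hub` (depth-first), so a notification created
    on a department hub reaches only that department's subsidiary sites.

    `children` maps each site to the sites it syndicates to directly.
    """
    targets = []
    seen = {hub}
    stack = list(reversed(children.get(hub, [])))
    while stack:
        site = stack.pop()
        if site in seen:
            continue  # guard against accidental cycles in the configuration
        seen.add(site)
        targets.append(site)
        stack.extend(reversed(children.get(site, [])))
    return targets


network = {
    "ri.gov": ["health", "dem"],
    "health": ["covid", "rihavens", "ridelivers"],
}
print(target_sites("health", network))  # ['covid', 'rihavens', 'ridelivers']
```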

Translations

The entire process also works with translations. After a notification is created in the default language, it gets queued and sent to the subsidiary sites. Then, a content author can create a translation of that same node and the translation will get queued and posted to the network of sites in the same manner as the original. All content and translations can be managed at the hub site, which will trickle down to the subsidiary sites.

Moving in the opposite direction

With all of the authorization, queues, batches, and APIs in place, the next challenge was making the system work with a Press Release content type. This presented two new challenges:

- Instead of moving content from the top down, we needed to move from the bottom up. Press release nodes get created on the affiliate sites and would need to be replicated on the hub site.

- Press release nodes were more complex than notification nodes. This content type included media references, taxonomy term references, and, toughest of all, paragraph references.

Solving the first challenge was fairly simple: we reused the custom publishing module and instructed the Queue API to send press release nodes to the hub sites.

The second challenge was harder. To replicate a complex entity like a press release node, we had to push not only the node itself but also every entity it referenced. For it all to work, the entities had to be created in reverse order: a referenced entity must already exist on the hub before the entity that references it can point to it.

Once a press release node was created or updated, we used the EntityInterface::referencedEntities() method to recursively drill into all of the entities referenced by the node. In some cases, this meant reaching paragraph entities nested two, three, or even four levels deep inside other paragraphs. Once we reached the bottom of the referenced-entity pile, we began queuing those entities from the bottom up: the paragraph nested four levels deep was the first to be sent, and the node itself was the last.
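The ordering described above is a post-order traversal of the reference graph. The sketch below models entities as plain string IDs in a map of references (a stand-in, not Drupal's API) so it runs on its own; in Drupal, the children of each entity would come from `referencedEntities()`. That method also returns base-field references such as the node's author, so a real implementation would likely filter by entity type before queuing.

```php
<?php

declare(strict_types=1);

/**
 * Returns entity IDs in send order: references before their referrers.
 *
 * @param string $rootId
 *   The entity that was created or updated (e.g. the press release node).
 * @param array $references
 *   Map of entity ID => list of referenced entity IDs (hypothetical model).
 *
 * @return string[]
 *   IDs ordered so the deepest entities come first and the root comes last.
 */
function send_order(string $rootId, array $references): array {
  $order = [];
  $visited = [];

  $visit = function (string $id) use (&$visit, &$order, &$visited, $references): void {
    if (isset($visited[$id])) {
      // Shared (or circular) references are queued only once.
      return;
    }
    $visited[$id] = TRUE;
    foreach ($references[$id] ?? [] as $childId) {
      $visit($childId);
    }
    // Append only after all children, so nested entities are sent first.
    $order[] = $id;
  };

  $visit($rootId);
  return $order;
}
```

For a node that references a media item, a term, and a paragraph nested four levels deep, the node lands last and the innermost paragraph is the first paragraph sent.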


Conclusion

Drupal’s powerful suite of APIs gave us all the tools we needed to build a platform that lets the State of Rhode Island easily keep its citizens informed, while giving its editing team a create-once, publish-everywhere workflow.

Each spring, students at the Rhode Island School of Design (RISD) exhibit their undergraduate and master’s thesis projects at the RISD Museum. Due to Covid-19, they were unable to prepare and stage physical exhibits in the spring of 2020.

Not to be deterred, the school and museum agreed to host the student work online as fully digital exhibits. The Museum previously partnered with Oomph to build out the award-winning “Raid the Icebox” online publication using Drupal and Layout Builder, so it felt familiar and natural to build out similar features for university student projects.

The work involved extending the existing online gallery features to hundreds of additional artists, so we needed to build a system that could scale. Along the way, we were also tasked with adding new features to the platform. Why not have lofty goals?

The Timeline

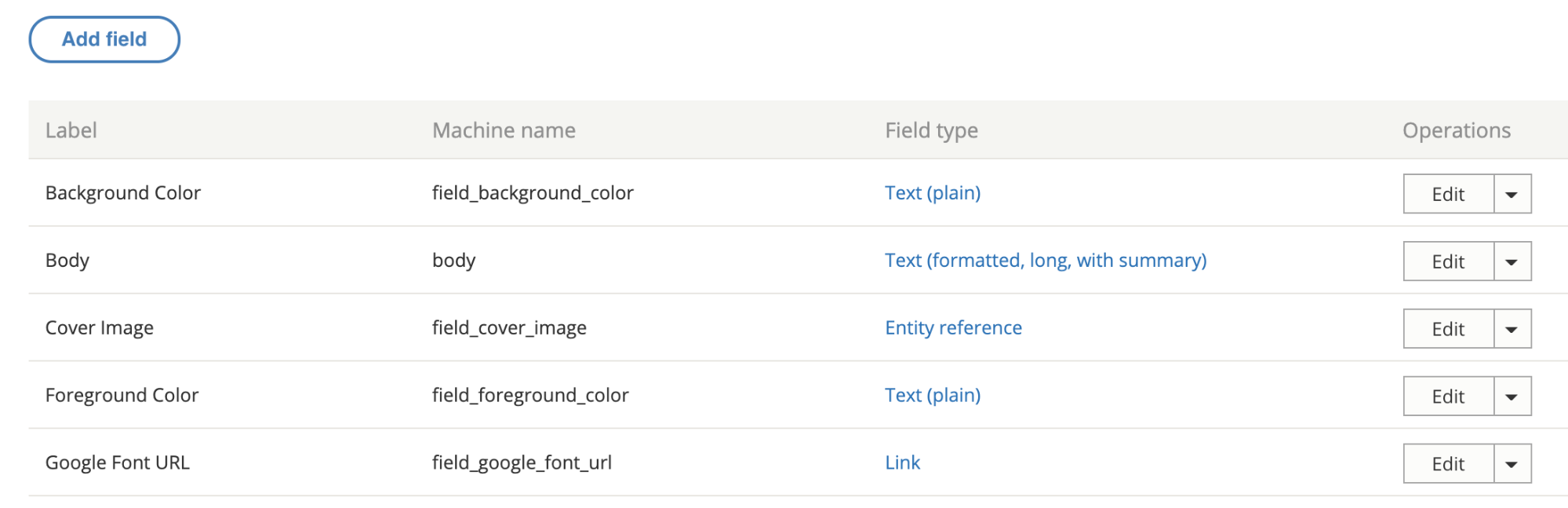

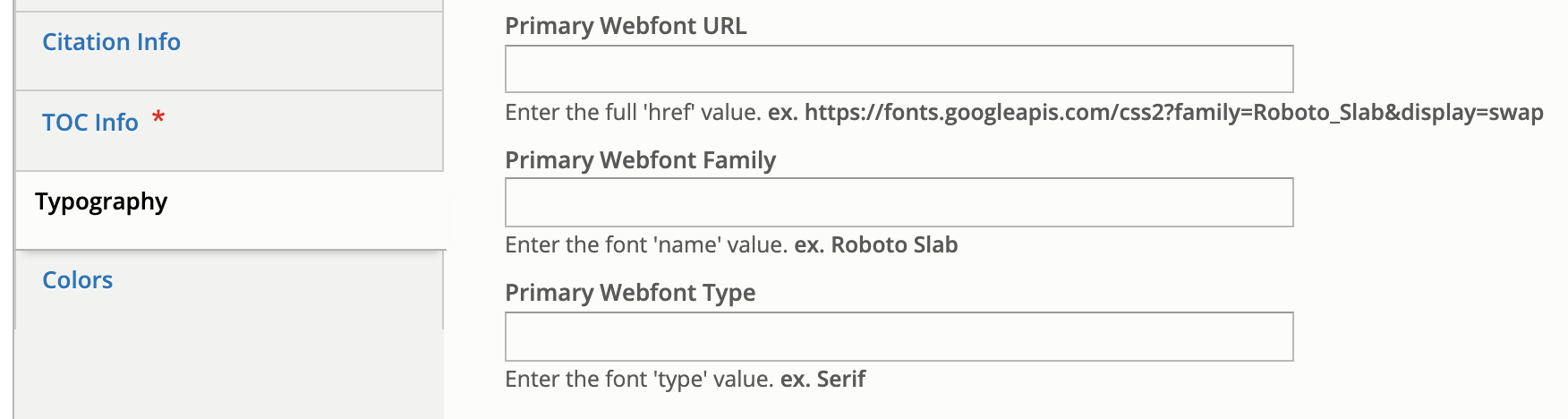

We kicked off the first stage of the project on April 20, 2020, aiming for a two-stage release. Most of the new code had to be deployed by the last week of May, with the additional features released two weeks later. The first stage had to establish the basic infrastructure along with custom permissions for artists, editors, and museum administrators; the second stage would refine font selection and the color palette.

What the Artists Needed

- A platform for routine editorial tasks such as editing content, uploading media, and altering the layout, along with the resources to perform many tasks outside the usual scope of content editors.

- The ability to add primary, secondary, and system webfonts to custom content types as well as their associated Layout Builder templates.

- A custom color palette whose colors were chosen by an admin user. This kind of style addition had to be available through Layout Builder for new publication nodes.

- A few trusted student authors also needed the ability to add JavaScript directly into page content. This was an intimidating requirement from a security standpoint, but the end results were highly engaging.

What the Staff Needed

- A robust set of permissions to enable multiple departments to have ownership and administrative controls over their particular domains, including:

  - Bulk upload of new users in anticipation of needing to add hundreds of students.

  - Node clone functionality (the ability to duplicate pages) to speed up the creation of new content.

  - Custom permissions for trusted editors for all content in a particular section.

  - Enabling those editors to grant artists permission to view, edit, and access Layout Builder for a particular node.

A Deeper Dive

Overall Approach

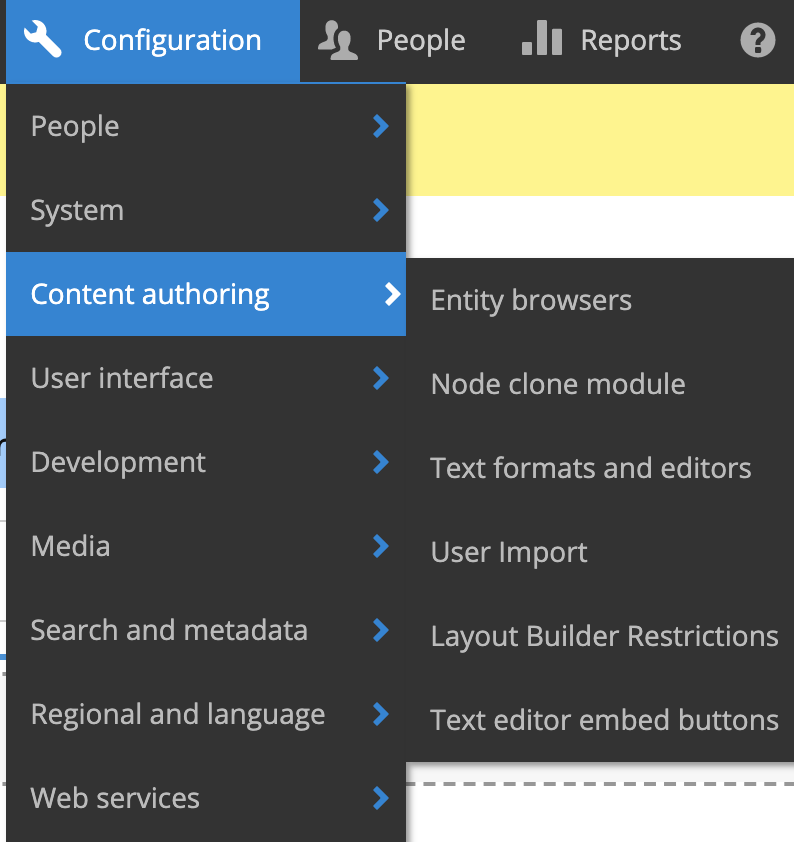

We leveraged Drupal to build out our new features “the Drupal way.” The Node Clone and Bulk User Import modules could be installed and enabled with our Composer workflow and used right out of the box to offer additional powerful functionality. Now a user with the Editor role could craft a meticulously designed template and then clone it for other school departments. A user with the Web Administrator role would not have to add users one-by-one through the user interface but could import large numbers of new users — while specifying the user role — with CSV files.

We added the new custom fields, content types, user roles, and text formats manually through Drupal’s UI. We could later use preprocess functions in the theme and Twig templates to render content as needed.

Many fields were needed to cover different aspects of the typography, such as the primary webfont URL, family, and type handled in the preprocess hook below.

Since it was a Drupal 8 project, we made extensive use of config sync to export and import config files. The front-end and back-end developers could work independently until it was time to merge branches for testing. Then we were able to seamlessly push changes to higher environments as part of our deploy process.

Note: As a rule, we recommend setting config to read-only, especially on projects that have many web admin users.
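A minimal sketch of that recommendation, assuming the contributed Config Read-only module (`config_readonly`), which is switched on through `$settings['config_readonly']` in settings.php. Locking only web requests keeps the admin UI read-only while still letting command-line tools such as Drush import config during a deploy; a local settings override could turn it off for development.

```php
<?php

// settings.php fragment (sketch). Assumes the Config Read-only module is
// enabled. Web requests get read-only config; CLI runs (e.g. a Drush config
// import during deployment) are left writable.
$settings['config_readonly'] = PHP_SAPI !== 'cli';
```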

Custom Webfont Example

With those new fields in place, a user sees text input fields on the node edit form of each publication for entering custom font URLs or names.

Rendering these values when someone views the node requires both a preprocess hook in the [custom_theme].theme file and changes to the Twig template.

Note: Please be aware that allowing hundreds of users to input free text is not an ideal situation, and that security measures should be taken when processing free text.
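One way to act on that note: Twig's autoescaping targets HTML, so it does not protect the CSS context inside the `<style>` element in the template below. A value printed in an unquoted position, such as the font type, could inject extra CSS rules. The sketch below validates each field against an allowlist before it reaches `$variables`; the function names and the allowed font hosts are hypothetical.

```php
<?php

declare(strict_types=1);

/**
 * Returns the stylesheet URL if it is https on an allowed host, else NULL.
 *
 * The default host allowlist is a hypothetical example.
 */
function safe_webfont_url(string $url, array $allowedHosts = ['fonts.googleapis.com', 'use.typekit.net']): ?string {
  $parts = parse_url($url);
  if ($parts === FALSE || ($parts['scheme'] ?? '') !== 'https') {
    return NULL;
  }
  return in_array($parts['host'] ?? '', $allowedHosts, TRUE) ? $url : NULL;
}

/**
 * Returns the family name if it only uses letters, digits, spaces, and
 * hyphens (safe inside a quoted CSS string), else NULL.
 */
function safe_font_family(string $family): ?string {
  return preg_match('/^[A-Za-z0-9 \-]{1,64}\z/', $family) === 1 ? $family : NULL;
}

/**
 * Returns the fallback only if it is a known CSS generic family, else NULL.
 */
function safe_font_type(string $type): ?string {
  $generic = ['serif', 'sans-serif', 'monospace', 'cursive', 'fantasy', 'system-ui'];
  return in_array($type, $generic, TRUE) ? $type : NULL;
}
```

In the preprocess hook, a NULL result could simply mean the variable is not set, so the template's `|length` checks skip that block.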

Here is what the preprocess hook looks like for the mytheme.theme file:

```php
<?php

use Drupal\node\NodeInterface;

/**
 * Implements hook_preprocess_HOOK() for html.html.twig.
 */
function mytheme_preprocess_html(array &$variables) {
  $node = \Drupal::routeMatch()->getParameter('node');

  // Only publication nodes carry the custom webfont fields.
  if ($node instanceof NodeInterface && $node->getType() === 'publication') {
    if ($node->hasField('field_primary_webfont_url') && !$node->get('field_primary_webfont_url')->isEmpty()) {
      $variables['primary_webfont_url'] = $node->get('field_primary_webfont_url')->value;
      $variables['primary_webfont_family'] = $node->get('field_primary_webfont_family')->value;
      $variables['primary_webfont_type'] = $node->get('field_primary_webfont_type')->value;
    }
    // The secondary webfont and color override variables used in the
    // template would be populated similarly (omitted here).
  }
}
```

Then, in the Twig template at myproject/docroot/themes/custom/mytheme/templates/layout/html.html.twig:

```twig
<!DOCTYPE html>
<html{{ html_attributes }}>
  <head>
    {# Placeholders from Drupal core's html.html.twig; without them the
       page's head tags, CSS, and JavaScript are never printed. #}
    <head-placeholder token="{{ placeholder_token }}">
    <title>{{ head_title|safe_join(' | ') }}</title>
    <css-placeholder token="{{ placeholder_token }}">
    <js-placeholder token="{{ placeholder_token }}">

    {# Printed after the theme CSS so these values win over :root
       defaults of equal specificity. #}
    {% if primary_webfont_url|length %}
      <link rel="stylesheet prefetch" media="screen" href="{{ primary_webfont_url }}">
      <style type="text/css">
        :root {
          --ff__serif: '{{ primary_webfont_family }}', {{ primary_webfont_type }};
        }
      </style>
    {% endif %}

    {% if secondary_webfont_url|length %}
      <link rel="stylesheet prefetch" media="screen" href="{{ secondary_webfont_url }}">
      <style type="text/css">
        :root {
          --ff__sans: '{{ secondary_webfont_family }}', {{ secondary_webfont_type }};
        }
      </style>
    {% endif %}

    {% if background_color_override|length and foreground_color_override|length %}
      <style type="text/css">
        :root {
          --c__primary--bg: {{ background_color_override }};
          --c__primary--fg: {{ foreground_color_override }};
        }
      </style>
    {% endif %}
  </head>
  <body{{ attributes }}>
    {{ page_top }}
    {{ page }}
    {{ page_bottom }}
    <js-bottom-placeholder token="{{ placeholder_token }}">
  </body>
</html>
```

Finally, here is what someone viewing a page would see:

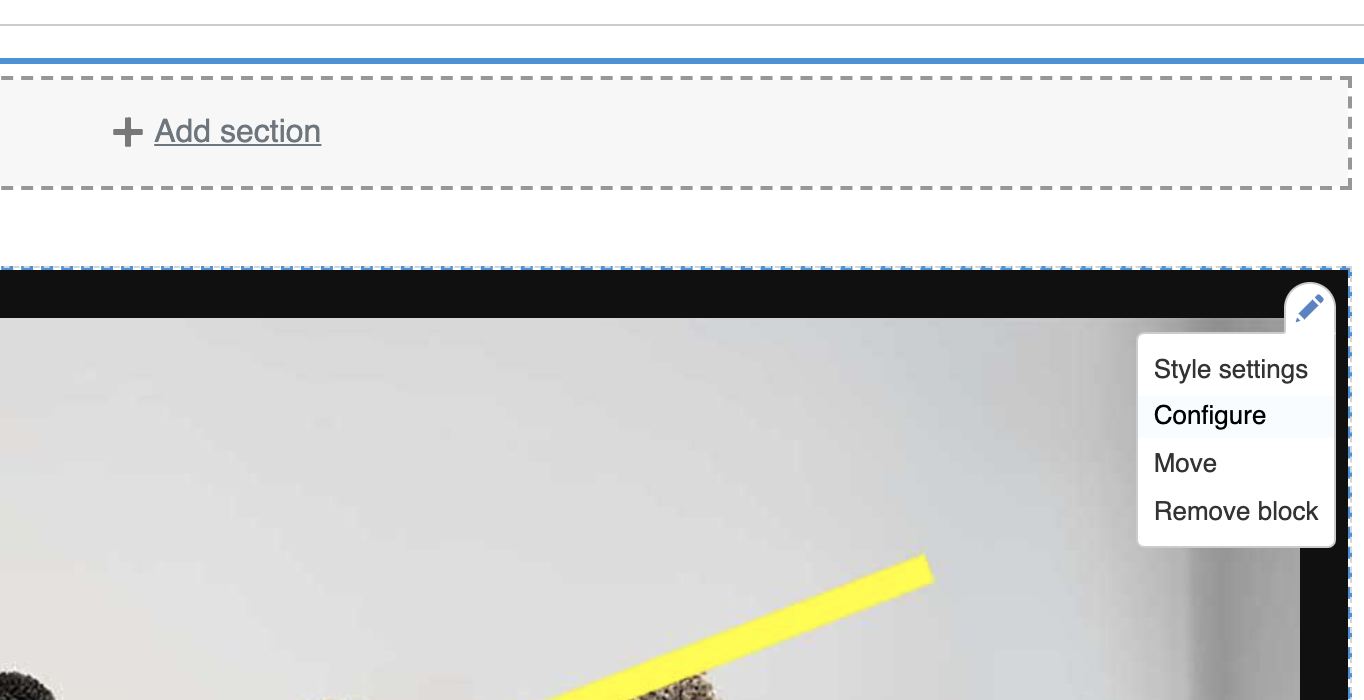

Most of the creative work for each piece of content happened behind the scenes in Layout Builder. Each block or section could be configured individually, which gave the artists a great deal of freedom to customize their online territories.

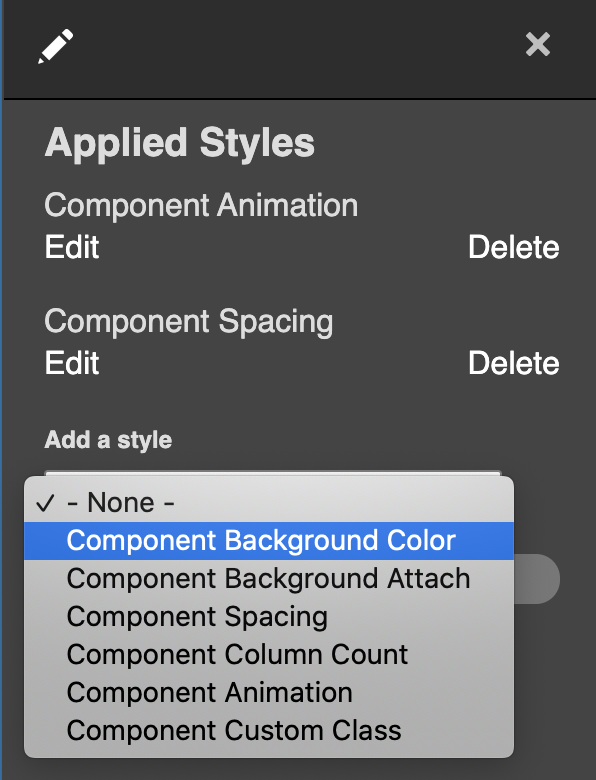

In addition to being able to choose a foreground or background color on the node level, an artist or editor can choose to change the color of just one block in Layout Builder simply by clicking on the “Style Settings” link.

Another inline-editing window will pop up with additional options. In the “Add a style” dropdown menu, the artist or editor can select “Component Background Color,” click “Add Styles,” and choose from one of the colors in the palette to be applied to the block.

Along with the preprocessing described in the previous section, we extended Layout Builder’s features with a custom module to alter layouts. The plugin class lives at: docroot/modules/custom/my_module/src/Plugin/Layout/LayoutBase.php

```php
<?php

namespace Drupal\my_module\Plugin\Layout;

use Drupal\Core\Form\FormStateInterface;
use Drupal\Core\Layout\LayoutDefault;
use Drupal\Core\Plugin\PluginFormInterface;

/**
 * Provides a base layout with background color options.
 */
class LayoutBase extends LayoutDefault implements PluginFormInterface {

  public const NO_BACKGROUND_COLOR = 0;

  /**
   * {@inheritdoc}
   */
  public function build(array $regions): array {
    $build = parent::build($regions);

    // Expose the selected palette color as a CSS class on the section.
    $backgroundColor = $this->configuration['background_color'];
    if ($backgroundColor) {
      $build['#attributes']['class'][] = 'rpp__bg-color--' . $backgroundColor;
    }

    return $build;
  }

  /**
   * {@inheritdoc}
   */
  public function defaultConfiguration(): array {
    // Keep any defaults the parent layout defines.
    return parent::defaultConfiguration() + [
      'background_color' => self::NO_BACKGROUND_COLOR,
      'id' => NULL,
      'background_color_override' => NULL,
    ];
  }

  /**
   * {@inheritdoc}
   */
  public function buildConfigurationForm(array $form, FormStateInterface $form_state): array {
    $form['background'] = [
      '#type' => 'details',
      '#title' => $this->t('Background'),
      '#open' => TRUE,
      '#weight' => 20,
    ];
    $form['background']['background_color'] = [
      '#type' => 'radios',
      '#title' => $this->t('Background Color'),
      // Radios need options; the admin-defined palette colors would be
      // listed here alongside the "none" choice.
      '#options' => [self::NO_BACKGROUND_COLOR => $this->t('None')],
      '#default_value' => $this->configuration['background_color'],
    ];
    $form['background']['overrides'] = [
      '#type' => 'fieldset',
      '#title' => $this->t('Overrides'),
    ];
    $form['background']['overrides']['background_color_override'] = [
      '#type' => 'textfield',
      '#title' => $this->t('Background Color'),
      '#default_value' => $this->configuration['background_color_override'],
      '#attributes' => [
        'placeholder' => '#000000',
      ],
    ];

    return $form;
  }

  /**
   * {@inheritdoc}
   */
  public function validateConfigurationForm(array &$form, FormStateInterface $form_state) {
    // Required by PluginFormInterface; the free-text override could be
    // validated here.
  }

  /**
   * {@inheritdoc}
   */
  public function submitConfigurationForm(array &$form, FormStateInterface $form_state) {
    $values = $form_state->getValues();
    $this->configuration['background_color'] = $values['background']['background_color'];
    // The "extra" element is not part of this excerpt, so fall back to NULL.
    $this->configuration['id'] = $values['extra']['attributes']['id'] ?? NULL;
    $this->configuration['background_color_override'] = $values['background']['overrides']['background_color_override'];
  }

}
```

The Background form gets inserted into the Layout Builder section configuration form, and the user’s color selections are saved to the layout’s configuration in the submitConfigurationForm() method.

The custom layout also needs to be registered in a file called my_module.layouts.yml. The class key points Drupal at the plugin class; without it, Drupal falls back to LayoutDefault and the background options never appear:

```yaml
layout_base:
  label: 'New Layout'
  category: 'Custom Layouts'
  class: '\Drupal\my_module\Plugin\Layout\LayoutBase'
  template: templates/layout-base
  default_region: main
  regions:
    main:
      label: Main content
```

Now this custom layout with color overrides and whatever else you want to add will be available for users with the appropriate permissions to edit content in Layout Builder.
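The override textfield accepts free text, with `#000000` as its placeholder. A strict format check, like the hypothetical helper below, could be called from the plugin's `validateConfigurationForm()` method and reported with `$form_state->setError()`. It accepts only 3- or 6-digit hex colors and normalizes them to lowercase `#rrggbb`, so nothing else can end up in a class name or a `<style>` block.

```php
<?php

declare(strict_types=1);

/**
 * Normalizes a hex color to lowercase "#rrggbb", or returns NULL.
 *
 * Accepts "#rgb" or "#rrggbb" (surrounding whitespace is trimmed); every
 * other value, including anything containing CSS syntax, is rejected.
 */
function normalize_hex_color(string $value): ?string {
  $value = trim($value);
  if (preg_match('/^#([0-9a-fA-F]{3}|[0-9a-fA-F]{6})\z/', $value, $matches) !== 1) {
    return NULL;
  }

  $hex = strtolower($matches[1]);
  if (strlen($hex) === 3) {
    // Expand shorthand: "#abc" becomes "#aabbcc".
    $hex = $hex[0] . $hex[0] . $hex[1] . $hex[1] . $hex[2] . $hex[2];
  }

  return '#' . $hex;
}
```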

Conclusion

Jeremy Radtke, Assistant Director of Digital Initiatives at the RISD Museum, said in a recent presentation to the Museum Publishing Digital Interest Group that RISD sees the museum as a site of creative collaboration. For the end-of-year digital showcase, this meant giving student artists a high degree of creative control over their projects. They were able to radically alter the layout templates offered to them, changing fonts, colors, and other elements of the theme, and to configure blocks with static images, animated GIFs, and other media such as short films to stretch the limits of the digital space.