Search and SEO are evolving rapidly in the wake of new AI options. Many of our clients are concerned about continuing to receive a return on their SEO investment. They worry about putting effort into the right places. And they worry about how to prepare for a drastic shift in the landscape, should it come.

The speed of evolution has made these questions difficult to answer with authority. But we conducted research, asked some experts, and have some theories that put these fears into context. Hopefully, they can help your organization navigate these uncharted waters.

Do AI Overviews reduce click-through rates?

In 2024, Google introduced AI-generated answers to queries in its search results. These “AI Overviews” are more likely to appear when a visitor phrases their search query like a question, using “what,” “how,” or “why” language. These overviews provide citations to their sources and a right sidebar (on laptops) with other references. Some are calling the traffic these overviews generate “zero-click” searches.

The short answer is yes: click-through rates have dropped by as much as 10%. Still, others argue that most websites will be unaffected. For one, Google has scaled back AI Overviews to only 1.28% of its billions of daily searches. This share will likely increase now that AI has become less likely to provide incorrect answers, but the perception that AI Overviews are everywhere is overblown.

Further, the same article goes on to assert that 96.5% of all AI Overviews appear for informational keywords — meaning very few overviews are created for transactional, navigational, and local searches. Informational questions are much easier and safer for AI to answer and will likely remain the dominant use case.

Others argue that AI Overviews keep low-performing traffic away from your site. For many years, Google has been answering queries with information cards. When you Google a business, you are likely to get a card with the business name, phone number, web address, and even a map with its location. Cards for popular businesses might include reviews and specific details like daily open hours. These information cards have already been taking traffic away from your site. But was that the traffic that you wanted?

These folks argue that if the searcher just wanted an answer to a question that came up in conversation with a friend, they would have come to your site for that information and then left. Their visit would have counted as a bounce and negatively affected your monthly traffic data. The same goes for visitors who just needed a phone number or wanted to know what time you close. They would have come to your website for that one thing and then left.

Google’s own research says that when people use AI Overviews to start understanding a topic, they end up searching more frequently and express higher satisfaction with the results. Their position is that these overviews scratch the surface and help visitors ask more in-depth follow-up questions. Other recent studies have found that click-through increased for companies featured in AI Overviews, while those without an AI Overview lost traffic.

One thing is for sure: the prominent position of AI Overviews at the top of the results has pushed down organic results and made it harder for high-ranking organic websites to get noticed.

Takeaway

Mixed. Yes, it is possible that AI Overviews are preventing click-through. It is also possible this traffic was not going to convert. And depending on your product and position in the market, AI Overviews might drive slightly more traffic than organic search. Either way, the result is an even more competitive search landscape than before.

Should I optimize my content for AI Overviews?

The most obvious next question is “How can my brand rank for AI Overviews?” While this is an important question, remember that AI Overviews often include citations from multiple sources. So while your business may rank for an overview, it is likely not going to be alone.

The answer to this question is more of the same things you should already know. In order to rank highly, you should:

- Follow SEO best practices

- Be authentic (leverage first-hand experiences like anecdotes, reference data sources, and be as unique as possible with your perspective)

- Anticipate next steps (what does someone need to learn and in which order)

- Use structured data (Schema.org markup, JSON-LD, etc.)

- Include multimedia (images, video, gifs)

Lots of SEO companies want to help your business rank, and AI Overviews is the next frontier. But from all the articles we have reviewed (and there were many), the same best practices apply — there are no shortcuts to great content.

Takeaway

Yes, optimize your content for AI Overviews, but this does not mean you need to do more than what you are already doing. Being a highly quoted source within your industry has benefits for brand recognition and trust, but just like long-tail keywords, these searches may have low volume. In the end, it is an investment vs. return question. There is significant overlap between the sources cited in AI Overviews and the top organic search results. Therefore, if your site already ranks well, there is not much more you can do to get into an AI Overview.

Should I continue investing in SEO for Google?

Some clients worry that Google will be unseated as the dominant search engine now that tools like OpenAI’s ChatGPT have seen an explosion of millions of users. While these tools are indeed experiencing hockey-stick growth, Google completely dominates search volume.

SparkToro charted a 20% growth in search queries for Google in 2024, and crunched the numbers to conclude Google receives 373 times more searches than ChatGPT.

To put that into context, Google handles 14 billion searches per day. The next closest competitor is Bing with 613.5 million per day, followed by Yahoo, DuckDuckGo, and then ChatGPT. In other words, your investment would see a larger return if your team optimized content for Bing.com than for ChatGPT.
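
As a rough sanity check on the figures above, the ratios can be worked out directly. This is only a back-of-the-envelope sketch: it assumes the 14 billion, 613.5 million, and 373-times figures all describe comparable daily search counts, and the variable names are ours.

```python
# Figures quoted above (searches per day). Treating them as directly
# comparable is an assumption made for this illustration.
GOOGLE_PER_DAY = 14_000_000_000
BING_PER_DAY = 613_500_000
GOOGLE_TO_CHATGPT_RATIO = 373  # SparkToro's multiple, as quoted above

# How many times larger Google's daily volume is than Bing's.
google_to_bing_ratio = GOOGLE_PER_DAY / BING_PER_DAY

# Daily ChatGPT search volume implied by the 373x multiple.
implied_chatgpt_per_day = GOOGLE_PER_DAY / GOOGLE_TO_CHATGPT_RATIO

print(f"Google vs. Bing: about {google_to_bing_ratio:.1f}x")
print(f"Implied ChatGPT searches per day: about {implied_chatgpt_per_day / 1e6:.1f} million")
```

Under those assumptions, Bing's daily volume is still more than ten times the ChatGPT volume implied by the 373-times multiple, which is the point behind the Bing comparison.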

These numbers are fresh from March 2025. Things can change, of course, but AI tools are not used only for search, have a relatively small market share, and do not get used daily. They suffer from not being the default tool at hand, which, for most people, is a web browser. Google remains synonymous with search for a large percentage of the population.

Takeaway

Yes, continue to invest in SEO for Google specifically. Google is still the biggest player in the search market, and its share is growing, not shrinking (yet).

If we don’t implement structured data, are we losing out on AI crawler traffic?

Structured data is great for all SEO, so actually, you should implement structured data like Schema.org for across-the-board SEO value.

For those of you using Google Tag Manager (GTM), you might know that you get some structured data for free. GTM creates that structured JSON data client-side, in the browser. Googlebot renders JavaScript when it crawls your site, which means that Google gets the structured data, but it is inaccessible to crawlers that do not execute JavaScript. If the data existed server-side, other bots could access it.

Most non-Google crawlers do not execute JavaScript. Therefore, they miss out on anything rendered in the browser. These crawlers include Bing, Yahoo, ChatGPT, Claude, and Perplexity. So again, server-side structured data would benefit all the search engine crawlers that are not Google.
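
To make the server-side option concrete, here is a minimal sketch in Python that builds a Schema.org Organization object and renders it as a JSON-LD script tag for inclusion in the HTML the server sends, so crawlers that skip JavaScript can still read it. The function name and the example organization details are hypothetical placeholders; a real site would usually generate this from its CMS or page templates.

```python
import json


def render_json_ld(data: dict) -> str:
    """Return a JSON-LD <script> tag to embed in server-rendered HTML."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a string value cannot close the script tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical example values; replace with your organization's details.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Company",
    "url": "https://www.example.com",
    "telephone": "+1-555-555-0100",
}

print(render_json_ld(organization))
```

The resulting tag would typically be placed in the page's head by the server-side template, rather than injected by a tag manager after the page loads.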

But do LLMs really need structured data?

Large Language Models (LLMs) use statistical analysis to predict what word will follow the previous set of words. They do not understand language as much as they can mathematically reproduce its patterns. Therefore, they create structure from unstructured data all the time.
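
The idea of predicting the next word can be illustrated with a deliberately tiny toy: counting which word most often follows another in a sample text. This is a sketch for intuition only, not how production LLMs are built (they use neural networks trained on vast amounts of text). The sample sentence and function names are made up for the example.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text: str) -> dict:
    """Count how often each word follows each other word in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up sample text; a real model learns from far more data.
sample = "search engines crawl pages and search engines rank pages"
model = build_bigram_counts(sample)
print(predict_next(model, "search"))
```

Even this toy finds a pattern in plain text with no labels or schema, which is the sense in which these models create structure from unstructured data.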

But while LLMs can process unstructured text, providing structured data would significantly help them interpret and categorize your content effectively and accurately.

Takeaway

The short answer is no, LLMs do not require structured data to create meaningful connections between content and search intent. But structured data would help them and any other search service to correctly label, tag, and organize your data. The longer answer is that an investment in structured metadata would pay dividends across all search engines and crawlers.

How can we prepare for SEO’s evolving future?

In mid-2024, when Google first introduced AI Overviews, some in the SEO/SERP industry claimed sites could lose up to 25% of their traffic. That has not come to pass: some sites report losses as high as 12%, while others report losses of 8.9% and 2.6%. These are not insignificant, but they are lower than expected. And the data is still coming in, with others reporting increases in traffic for specific kinds of intent.

As AI increasingly shapes search results, content strategy will need to shift for sites to remain visible and relevant. High-quality, authoritative, and authentic content that offers depth, accuracy, and unique insights is still valuable currency. AI algorithms are designed to identify and prioritize quality, trustworthy, and well-researched content for inclusion in their summaries.

Sites should continue to target long-tail and question-based keywords to align content with visitors’ increasing use of natural-language queries. This type of content is often more challenging for AI to fully synthesize and may still necessitate user click-through for a comprehensive understanding. Going deeper to investigate the specific intents behind longer conversational queries could also be crucial for attracting relevant traffic.

Finally, diversifying content formats by incorporating video, infographics, and interactive elements will continue to enhance engagement and provide unique value that text-based AI summaries don’t fully replicate. And optimizing content for featured snippets remains important, as appearing in these snippets increases the likelihood of a website’s content being cited within AI Overviews.

Takeaway

The fundamentals of great content and best-practice SEO have not changed as dramatically as the tools that crawl your site and serve your content have.

Final Thoughts

Anything in the tech space evolves rapidly, and SEO is no exception. While the methods and the tools we leverage might change, the fundamentals remain strong. Keep doing what you have been doing, keep being curious, and keep asking these important questions of those in your circle whom you trust. We’re all figuring these things out in real time and can benefit from each other’s expertise.

If you have in-depth questions about SEO, content management, and the evolving AI-powered landscape, reach out to our team and we’ll always do our best to answer them thoughtfully and from multiple angles.

AI disclaimer: Google’s Deep Research was used for initial exploration and source gathering. All sources cited in this article were reviewed by the author. ChatGPT was used for follow-up questions, and AI Overviews were used for examples of common questions. This article synthesizes these sources and was written by a human.

It’s no secret: Higher education institutions are complex.

Between multiple campuses, multiple audiences, and a high volume of content, higher ed marketing and communications teams have a ton to juggle.

And that’s before you throw a new website into the mix.

Not long ago, the team at Oomph partnered with the University of Colorado (CU) and Keene State College (KSC) to redesign sites for each institution. While their asks – and end products – were unique, the processes had a lot in common. So much so that we’re peeling back the curtain on our discovery process to give other higher ed institutions the tools to deliver websites that meet business goals and audience needs.

In this article, we’re turning our lessons learned into a discovery playbook that can help higher education institutions set the stage for a successful site redesign.

The Projects

University of Colorado Giving Platform

The University of Colorado has an active and engaged alumni network that loves supporting all things CU. The university came to Oomph because it needed a donor funds platform that could keep up. The goals of the discovery process were to:

- Document existing conditions, strengths and weaknesses, and stakeholder perceptions.

- Compare the strengths and weaknesses of the current site to similar ones from other colleges and universities.

- Refocus and align on core audiences that visit the site.

- Recommend ways to improve and enhance existing content and storytelling.

- Evaluate and make recommendations for a modern technology stack.

While CU had a gut feeling about what it would take to meet internal expectations and keep prospective donors happy, gut feelings aren’t enough to build a website. CU knew that a professional perspective and data-backed analysis would lay a firm foundation for the site redesign.

Keene State College Main Website

KSC, a public liberal arts college in New Hampshire, wanted a refreshed main website that would resonate with prospective students, current students, and alumni alike. For KSC, key goals during discovery were to:

- Better understand the needs of, and more deeply define, their primary audience: prospective students.

- Identify navigation patterns that would make it easier for students and their parents to find the content they need.

- Define design styles that create a more welcoming website experience and support storytelling during the future redesign.

The team came to Oomph with ideas but wanted research validation and guidance to nurture those ideas into a strategic design plan.

The Approach

For both projects, Oomph utilized our in-depth discovery process to validate assumptions, clarify priorities, and gain buy-in across the organizations.

KSC and CU both had a good sense of the work they needed done. But having a feel for the floorplan doesn’t mean you’re ready to build your dream house. Whether it’s a home or a website, both projects need an architect: an experienced professional who can consider all the requirements and create a strategic framework that’s able to support them. That work should happen before deciding what paint to put on the walls.

In our initial review, Oomph found that both sites had similar challenges: They struggled to focus on one key audience and to easily guide users through the site to the desired content. Our question was: How do we solve those struggles in a new website?

To answer it, we led KSC and CU through discovery processes that included:

- An intake questionnaire and live sessions with key stakeholders to understand the goals and challenges holding the current sites back.

- Defining strengths and areas for improvement with methods like a UX audit, a content and analytics audit, and a cohort analysis.

- Creating user journey maps that rolled audience, website, and competitive insights into a unified vision for the new user experience.

- Delivering a final set of data-backed recommendations that translated needs and wants into actionable next steps, equipping both teams to secure organizational approval and move the projects forward.

The Insights

Discovery isn’t one-size-fits-all. However, our experiences with KSC and CU highlighted some common challenges that many higher-ed institutions face. Our insights from these projects offer a starting point for other institutions kicking off their own website redesigns.

- Start with your audience’s needs.

Who is your primary audience? Figure out who they are, then really drill down on their needs, preferences, and desired actions. This can be uniquely challenging for higher education institutions because they serve such a wide range of people.

Data is how you overcome that hurdle. As part of discovery, we:

- Completed a UX audit with tools like the BASIC heuristics framework to see which parts of the user flow are working and which need an overhaul.

- Rounded out those findings with a content audit to understand how users engaged with the site content.

- Completed a cohort analysis to see how the sites stacked up against their higher-ed peers.

When you do that work, you get a bird’s-eye view into what your audience really needs – and what it’ll take for your website to be up to the challenge.

Take KSC. The existing site attempted to serve multiple audiences, creating a user journey that looked like this:

We identified a primary audience of prospective students, specifically local high school students and their parents/guardians. When you speak directly to those students, you get a simpler, cleaner user journey that looks like this:

- Define organized and clear navigation to support user journeys.

Your navigation is like a map. When all the right roads are in place, it should help your users get where they want to go.

That makes your navigation the starting point for your redesign. Your goal is to define where content will live, the actions users can take upon arrival, and, equally important, the content they won’t see at first. This then informs what goes where – the header nav, the footer nav, or the utility nav – because each has unique and complementary purposes.

With both KSC and CU, discovery was our opportunity to start building a navigation that would serve the primary audience we had already uncovered. For CU, the current navigation:

- Made it difficult for donors to find what they need. Search, Write-In Fund, and “Page Not Found” were among the top 20 most visited pages, indicating that users weren’t easily finding their way.

- Didn’t engage donors, which showed up in the low number of returning visits.

- Needed to help donors more quickly find what they need without having to search the entire site.

Current CU navigation:

During discovery, we created an updated navigation that would appeal to its primary audience of prospective donors, while still meeting the needs of secondary audiences (returning donors and giving professionals).

Proposed CU navigation:

- Find an optimal blend of branding, design, and content – especially for the home page and other high-visibility areas.

The design and content you choose for your site should resonate with your target audience and enhance the navigation you already defined. In that way, your home page is like your storefront. What will you put on the sign or display in the windows so people will actually walk inside?

That’s the secret sauce behind this part of discovery: deciding what your primary audience really needs to know and how best to showcase it.

To help KSC speak to prospective students, we recommended:

- Using movement, color, and text styles to add visual impact to important content and create a welcoming, curated experience.

- Prioritizing diversity in visuals, so a wide range of students could picture themselves as part of the student body.

- Using stats and testimonials to build trust and foster a feeling of belonging.

CU wanted to connect with prospective donors, so we suggested a design that:

- Incorporated more storytelling and impact stories to show the positive ripple effect of every dollar donated.

- Included strategic calls to action to pull users deeper into the site.

- Added social sharing to enable donors to highlight their generosity and feel a deeper sense of connection.

- Probe additional areas where needed (and skip where it’s not).

Our hot take: There is such a thing as too much data. If you’re wading through pools of information that isn’t relevant to your end user, it can muddy the waters and make it harder to identify what’s worth acting on.

With that in mind, this step will change from project to project. Ask yourself, what else does my audience need to feel like they got what they came for?

For KSC, that involved additional strategy work – like the information architecture – that helped the institution gear up for later design phases. CU, on the other hand, needed significant technical discovery to address the level of custom code required, limited page-building capabilities, and clunky e-commerce integration. We recommended an updated tech stack, including a new donation platform and payment gateway, that would improve security, simplify maintenance, and enhance the user experience.

- Plan for a system that allows for easy updates later.

As they say, the only constant is change. This rings especially true for higher ed institution websites, where content is plentiful and multiple stakeholders need to make site updates.

To make sure CU and KSC’s sites continued to work for them long after our projects had ended, our discovery included suggestions around:

- Choosing the right content management system (CMS). We ensured each site had CMS capabilities that would allow non-tech-savvy team members to maintain and update the content.

- Establishing design systems and templates for ease and consistency. For CU, that involved creating repeatable page layouts for their fund pages for simpler authoring and visual polish.

Start Your Redesign on the Right Foot

A thorough, well-researched, and well-organized discovery is key for designing a website that meets all of your – and your audience’s – needs.

Need a fresh perspective on your higher ed site redesign? Let’s talk about it.

There’s a new acronym on the block: MACH (pronounced “mock”) architecture.

But like X is to Twitter, MACH is more a rebrand than a reinvention. In fact, you’re probably already familiar with the M, A, C, and H and may even use them across your digital properties. While we’ve been helping our clients implement aspects of MACH architecture for years, organizations like the MACH Alliance have recently formed in an attempt to provide clearer definition around the approach, as well as to align their service offerings with the technologies at hand.

One thing we’ve learned at Oomph after years of working with these technologies? It isn’t an all-or-nothing proposition. There are many degrees of MACH adoption, and how far you go depends on your organization and its unique needs.

But first, you need to know what MACH architecture is, why it’s great (and when it’s not), and how to get started.

What Is MACH?

MACH is an approach to designing, building, and testing agile digital systems — particularly websites. It stands for microservices, APIs, cloud-native, and headless.

Like a composable business, MACH unites a few tried-and-true components into a single, seamless framework for building modern digital systems.

The components of MACH architecture are:

- Microservices: Many online features and functions can be separated into more specific tasks, or microservices. Modern web apps often rely on specialized vendors to offer individual services, like sending emails, authenticating users, or completing transactions, rather than a single provider to rule them all.

- APIs: Microservices interact with a website through APIs, or application programming interfaces. This allows developers to change the site’s architecture without impacting the applications that use APIs and easily offer those APIs to their customers.

- Cloud-Native: A cloud-based environment hosts websites and applications via the Internet, ensuring scalability and performance. Modern cloud technologies like Kubernetes, containers, and virtual machines keep applications consistent while meeting the demands of your users.

- Headless: Modern JavaScript frameworks like Next.js and Gatsby empower intuitive front ends that can be coupled with a variety of back-end content management systems, like Drupal and WordPress. This gives administrators the authoring power they want without impacting end users’ experience.
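
The microservices and API bullets above describe a design where the site depends on a small interface rather than on any one vendor. Here is a minimal sketch of that idea in Python. Everything in it is hypothetical: EmailService, the two vendor classes, and send_receipt are illustrative names, and the vendor classes return a placeholder string instead of calling a real vendor API.

```python
from typing import Protocol


class EmailService(Protocol):
    """The only contract the website relies on."""

    def send(self, to: str, subject: str, body: str) -> str: ...


class VendorAEmail:
    """Stand-in for one vendor's client; a real one would call its HTTP API."""

    def send(self, to: str, subject: str, body: str) -> str:
        return f"vendor-a:queued:{to}"


class VendorBEmail:
    """Stand-in for a replacement vendor exposing the same interface."""

    def send(self, to: str, subject: str, body: str) -> str:
        return f"vendor-b:queued:{to}"


def send_receipt(email: EmailService, customer: str, amount: float) -> str:
    """Site code depends on the interface, not on a specific vendor."""
    body = f"Thanks for your payment of ${amount:.2f}."
    return email.send(customer, "Your receipt", body)


# Swapping vendors changes the object passed in, not send_receipt itself.
print(send_receipt(VendorAEmail(), "pat@example.com", 25))
print(send_receipt(VendorBEmail(), "pat@example.com", 25))
```

Because the calling code only knows about the interface, replacing one service should not ripple through the rest of the site, which is the flexibility MACH aims for.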

Are You Already MACHing?

Even if the term MACH is new to you, chances are good that you’re already doing some version of it. Here are some telltale signs:

- You have one vendor for single sign-on (SSO), one vendor to capture payment information, another to handle email payment confirmations, and so on.

- You use APIs to integrate with tech solutions like HubSpot, Salesforce, PayPal, and more.

- Your website — or any website feature or application — is deployed within a cloud environment.

- Your website’s front end is managed by a different vendor than its back end.

If you’re doing any of the above, you’re MACHing. But the magic of MACH is in bringing them all together, and there are plenty of reasons why companies are taking the leap.

5 Benefits of MACH Architecture

If you make the transition to MACH, you can expect:

- Choice: Organizations that use MACH don’t have to settle for one provider that’s “good enough” for the countless services websites need. Instead, they can choose the best vendor for the job. For example, when Oomph worked with One Percent for America to build a platform offering low-interest loans to immigrants pursuing citizenship, that meant leveraging the Salesforce CRM for loan approvals, while choosing “Click and Pledge” for donations and credit card transactions.

- Flexibility: MACH architecture’s modular nature allows you to select and integrate individual components more easily and seamlessly update or replace those components. Our client Leica, for example, was able to update its order fulfillment application with minimal impact to the rest of its Drupal site.

- Performance: Headless applications often run faster and are easier to test, so you can deploy knowing you’ve created an optimal user experience. For example, we used a decoupled architecture for our client Wingspans to create a stable, flexible, and scalable site with lightning-fast performance for its audience of young career-seekers.

- Security: Breaches are generally limited to individual features or components, keeping your entire system more secure.

- Future-Proofing: A MACH system scales easily because each service is individually configured, making it easier to keep up with technologies and trends and avoid becoming out-of-date.

5 Drawbacks of MACH Architecture

As beneficial as MACH architecture can be, making the switch isn’t always smooth sailing. Before deciding to adopt MACH, consider these potential pitfalls.

- Complexity: With MACH architecture, you’ll have more vendors — sometimes a lot more — than if you run everything on one enterprise system. That’s more relationships to manage and more training needed for your employees, which can complicate development, testing, deployment, and overall system understanding.

- Challenges With Data Parity: Following data and transactions across multiple microservices can be tricky. You may encounter synchronization issues as you get your system dialed in, which can frustrate your customers and the team maintaining your website.

- Security: You read that right — security is a potential pro and a con with MACH, depending on your risk tolerance. While your whole site is less likely to go down with MACH, working with more vendors leaves you more vulnerable to breaches for specific services.

- Technological Mishaps: As you explore new solutions for specific services, you’ll often start to use newer and less proven technologies. While some solutions will be a home run, you may also have a few misses.

- Complicated Pricing: Instead of paying one price tag for an enterprise system, MACH means buying multiple subscriptions that can fluctuate more in price. This, coupled with the increased overhead of operating a MACH-based website, can burden your budget.
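
For the data parity drawback in particular, one common mitigation is a periodic reconciliation check that compares records across services. The sketch below assumes two hypothetical exports, one from a payment service and one from a CRM, each keyed by an order ID. The function name, field layout, and sample records are invented for illustration.

```python
def find_parity_gaps(payment_orders: dict, crm_orders: dict) -> dict:
    """Compare two {order_id: amount} maps and report where they disagree."""
    payment_ids = set(payment_orders)
    crm_ids = set(crm_orders)
    return {
        "missing_in_crm": sorted(payment_ids - crm_ids),
        "missing_in_payments": sorted(crm_ids - payment_ids),
        "amount_mismatch": sorted(
            order_id
            for order_id in payment_ids & crm_ids
            if payment_orders[order_id] != crm_orders[order_id]
        ),
    }


# Hypothetical sample data standing in for exports from each service.
payments = {"A100": 50.00, "A101": 25.00, "A102": 10.00}
crm = {"A100": 50.00, "A101": 20.00, "A103": 75.00}

print(find_parity_gaps(payments, crm))
```

A check like this does not prevent synchronization issues, but it can surface them before customers or site maintainers run into them.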

Is MACH Architecture Right for You?

In our experience, most brands could benefit from at least a little bit of MACH. Some of our clients are taking a MACH-lite approach with a few services or apps, while others have adopted a more comprehensive MACH architecture.

Whether MACH is the right move for you depends on your:

- Platform Size and Complexity: Smaller brands with tight budgets and simple websites may not need a full-on MACH approach. But if you’re managing content across multiple sites and apps, managing a high volume of communications and transactions, and need to iterate quickly to keep up with rapid growth, MACH is often the way to go.

- Level of Security: If you’re in a highly regulated industry and need things locked down, you may be better off with a single enterprise system than a multi-vendor MACH solution.

- ROI Needs: If it’s time to replace your system anyway, or you’re struggling with internal costs and the diminishing value of your current setup, it may be time to consider MACH.

- Organizational Structure: If different teams are responsible for distinct business functions, MACH may be a good fit.

How To Implement MACH Architecture

If any of the above scenarios apply to your organization, you’re probably anxious to give MACH a go. But a solid MACH architecture doesn’t happen overnight. We recommend starting with a technology audit: a systematic, data-driven review of your current system and its limitations.

We recently partnered with career platform Wingspans to modernize its website. Below is an example of the audit and the output: a seamless and responsive MACH architecture.

The Audit

- Surveys/Questionnaires: We started with some simple questions about the Wingspans website, including what was working, what wasn’t, and the team’s reasons for updating. They shared that they wanted to offer their users a more modern experience.

- Stakeholder Interviews: We used insights from the surveys to spark more in-depth discussions with team members close to the website. Through conversation, we uncovered that website performance and speed were their users’ primary pain points.

- Systems Access and Audit: Then, we took a peek under the hood. Wingspans had already shared its poor experiences with previous vendors and applications, so we wanted to uncover simpler ways to improve site speed and performance.

- Organizational Structure: Understanding how the organization functions helps design a system to meet those needs. The Wingspans team was excited about modern technology and relatively savvy, but they also needed a system that could accommodate thousands of authenticated community members.

- Marketing Plan Review: We also wanted to understand how Wingspans would talk about their website. They sought an “app-like” experience with super-fast search, which gave us insight into how their MACH system needed to function.

- Roadmap: Wingspans had a rapid go-to-market timeline. We simplified our typical roadmap to meet that goal, knowing that MACH architecture would be easy to update down the road.

- Delivery: We recommended Wingspans deploy as a headless site (a site we later developed for them), with documentation we could hand off to their design partner.

The Output

We later deployed Wingspans.com as a headless site using the following components of MACH architecture:

- Microservices: Wingspans leverages microservices like Algolia Search for site search, Amazon Web Services (AWS) for email sends and static site hosting, and Stripe for managing transactions.

- APIs: Wingspans.com communicates with the above microservices through simple APIs.

- Cloud-Native: The new website uses cloud-computing services like Google Firebase, which supports user authentication and data storage.

- Headless: Gatsby powers the front-end design, while Cosmic JS is the back-end content management system (CMS).

Let’s Talk MACH

As MACH evolves, the conversation around it will, too. Wondering which components may revolutionize your site and which to skip (for now)? Get in touch to set up your own technology audit.

Finding yourself bogged down with digital analytics? Spending hours just collecting and organizing information from your websites and apps? Looker Studio could be the answer to all your problems (well, maybe not all of them, but at least where data analytics are concerned).

This business intelligence tool from Google is designed to solve one of the biggest headaches out there for marketers: turning mountains of website data into actionable insights. Anyone who’s ever gone down the proverbial rabbit hole scouring Google Analytics for the right metrics or manually inputting numbers from a spreadsheet into their business intelligence platform knows that organizing this data is no small task. With Looker Studio, you can consolidate and simplify complicated data, freeing up more time for actual analysis.

With so many customizable features and templates, it does take time to set up a Looker Studio report that works for you. Since Google’s recent switch from Universal Analytics to Google Analytics 4, you might also find that certain Looker Studio reports aren’t working the way they used to.

Not to worry: Our Oomph engineers help clients configure and analyze data with Looker Studio every day, and we’ve learned a few tips along the way. Here’s what to know to make Looker Studio work for your business.

The benefits of using Looker Studio for data visualization and analysis

Formerly known as Google Data Studio, Looker Studio pulls, organizes, and visualizes data in one unified reporting experience. For marketers who rely heavily on data to make informed decisions, Looker Studio can save precious time and energy, which you can then invest in analyzing and interpreting data.

Key benefits of using Looker Studio include:

- Connecting data from multiple sources: The platform can unify data from a mind-boggling 800+ sources and 600+ data connectors, which means you can upload and integrate multiple data sets into one comprehensive report. This not only saves time, but also provides more accurate insights into business performance for organizations with complex digital environments.

- User-friendly insights: Looker Studio’s visual dashboards are easy to interpret, customize, and share – even with executives who might not be as digitally fluent as you. You can choose from a variety of drag-and-drop data visualization options, such as charts, graphs, and tables, or use Looker Studio’s pre-designed visualizations.

- Powerful customization: Want to be informed the minute your form conversion rate changes? With Looker Studio, you can set up alerts to notify you of significant changes in your data, enabling you to adjust your marketing strategy and optimize ROI in real time. You can also generate reports to track your progress and share them with your clients or team members.

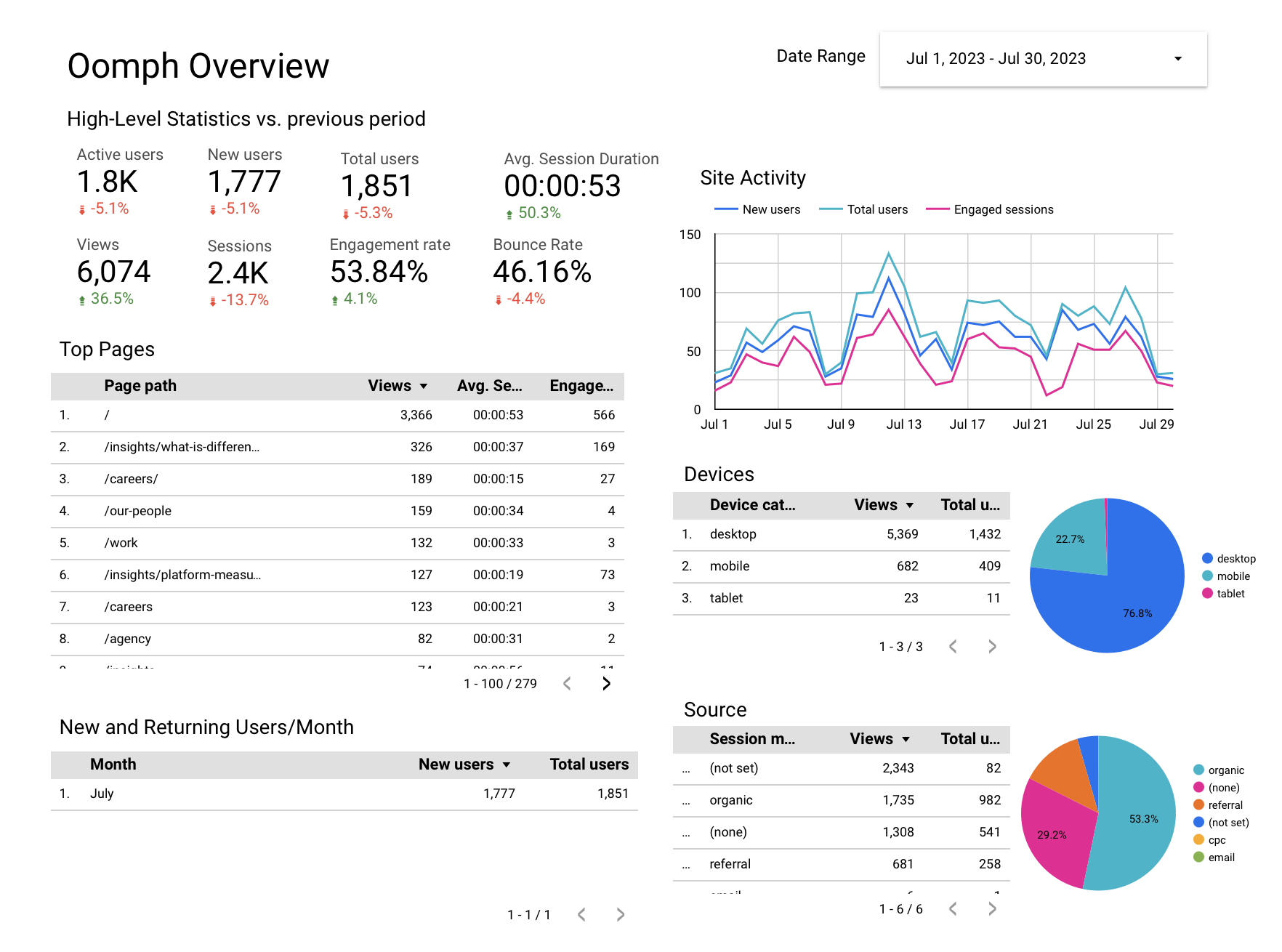

How Oomph uses Looker Studio

As a digital-first company in the business of helping other digital-first companies, we’re big fans of Looker Studio. We think the platform is a great way to share trends on your websites and apps in an easy-to-digest way, making monthly or quarterly reporting much more efficient.

Whether you’re looking for basic insights or need sophisticated analysis, Looker Studio’s visualization capabilities can support smarter, more informed digital decision-making. Here’s a peek at some of the metrics we monitor for our own business, including:

- Number of users

- New users versus returning users

- Average time spent on site and on page

- Top pages viewed

- Traffic sources

- Traffic by device

- Event tracking and conversion rates

We also use the platform to drill deeper, comparing trends over time, identifying seasonal fluctuations and assessing the performance of specific campaigns. We leverage features like dashboards and filters in Looker Studio to give our clients an interactive view of their data.

How Looker Studio Works With GA4

Google Analytics, now known as GA4, is one of the primary tools we connect to Looker Studio. GA4 is the latest version of Google’s popular analytics platform and offers new features and functionality compared with its predecessor, Universal Analytics (UA), including new data visualization capabilities.

As many companies migrate over to GA4, they may be wondering whether reporting will be similar between GA4 and Looker Studio – and whether they need both.

GA4’s built-in reports may rival some of Looker Studio’s capabilities, but Looker Studio provides a variety of features that go beyond what GA4 can do on its own. GA4 dashboards and reports only include GA4 data, whereas Looker Studio can import data from other sources as well. This means you can use Looker Studio to track trends in your site’s performance, regardless of the data source.

Looker Studio also supports calculated fields and data blending, which allow users to create custom metrics and transformations. This means you can tailor your data to your specific needs, rather than being limited by GA4’s built-in reporting. Finally, Looker Studio’s robust sharing and collaboration features allow teams to share data and insights easily and efficiently.

If your company set up Looker Studio before switching to GA4, you may notice a few metrics are now out of sync. Here are a few adjustments to get everything working correctly:

- Average time on page: This was previously a standard feature in UA that’s no longer available in GA4. To configure, you’ll need to track the “user engagement” metric divided by “number of sessions.”

- Bounce rate: Tracking bounce rate with GA4 now takes an additional step as well. To configure, subtract the “engagement rate” metric from 1 to arrive at your bounce rate.

- Events: Simply update your Looker Studio connection settings to use the new GA4 event schema and ensure that you’re using the correct event names and parameters in your report fields and calculated fields.
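
To make the first two adjustments concrete, here is a minimal Python sketch of the arithmetic. The function and parameter names are our own illustrations, not GA4 or Looker Studio field names; in practice, you would express the same math in a Looker Studio calculated field.

```python
def average_engagement_time_per_session(user_engagement_seconds: float, sessions: int) -> float:
    """Stand-in for UA's average time on page: user engagement divided by sessions."""
    if sessions <= 0:
        # Avoid dividing by zero when a date range has no sessions.
        return 0.0
    return user_engagement_seconds / sessions


def bounce_rate(engagement_rate: float) -> float:
    """Bounce rate is 1 minus the engagement rate (both expressed as fractions)."""
    if not 0.0 <= engagement_rate <= 1.0:
        raise ValueError("engagement_rate must be between 0 and 1")
    return 1.0 - engagement_rate
```

For example, 1,200 seconds of user engagement across 100 sessions works out to 12 seconds per session, and an engagement rate of 0.75 corresponds to a bounce rate of 0.25.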

How To Set Up a Looker Studio Report

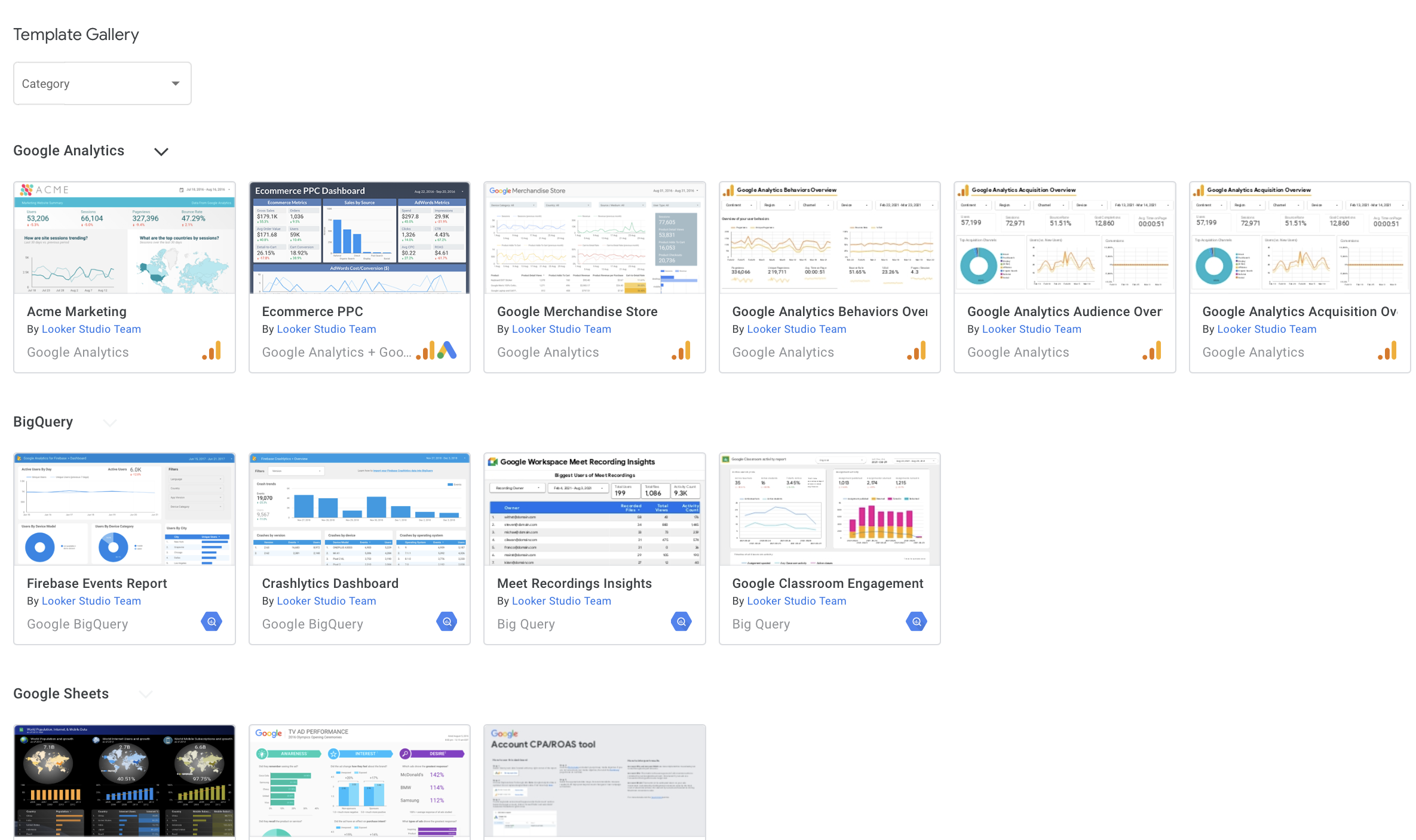

- Choose a template for your dashboard or create one from scratch. If you’re not sure, you can browse through templates to get an idea of what Looker Studio can do.

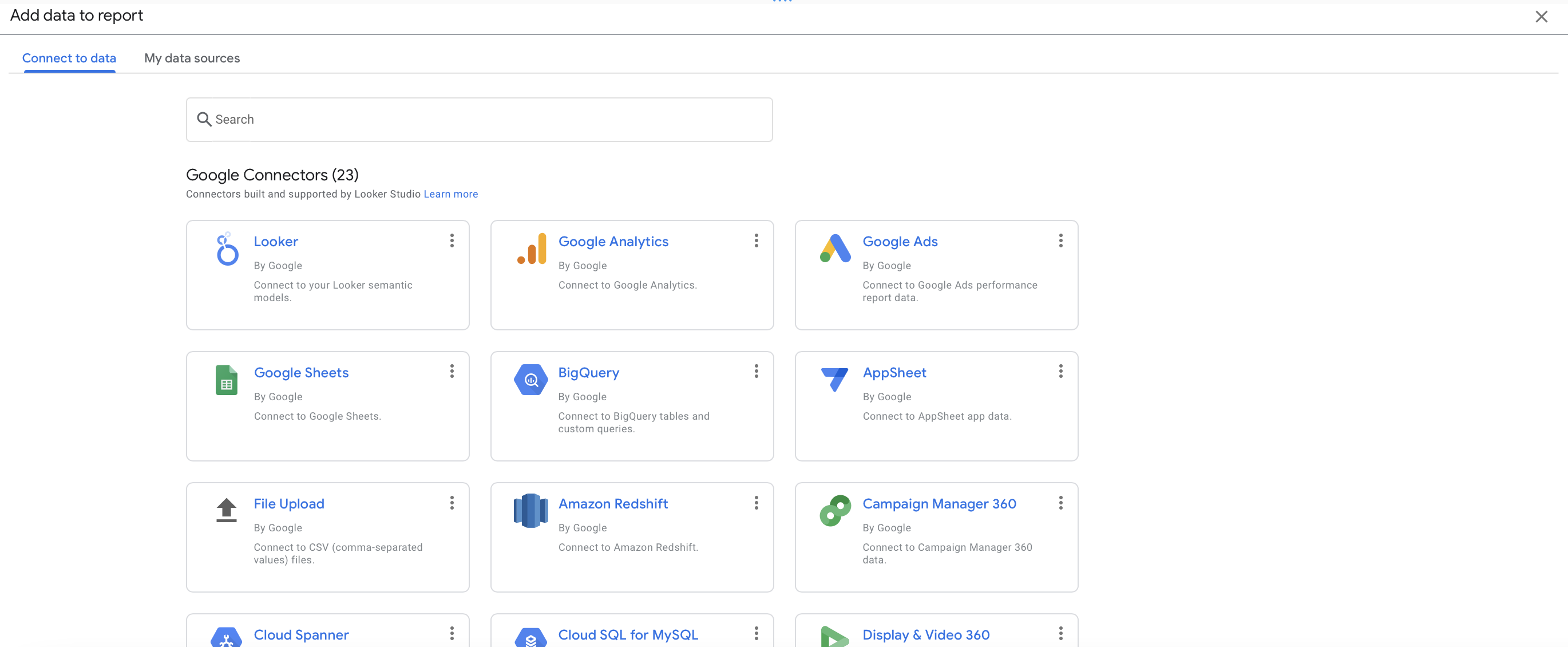

- Connect your data source. Looker Studio supports a long list of sources, including Google, MySQL, AWS Redshift, and more. Don’t worry if your data isn’t in Google – Looker Studio will likely be able to connect to it regardless.

- Choose your metrics. These are the specific data points you want to track and analyze in your report. You can customize your metrics to fit your specific needs.

- Build your dashboard. You can add charts, tables, and other visualizations to help you understand your data. Looker Studio makes it easy to drag and drop these elements into place.

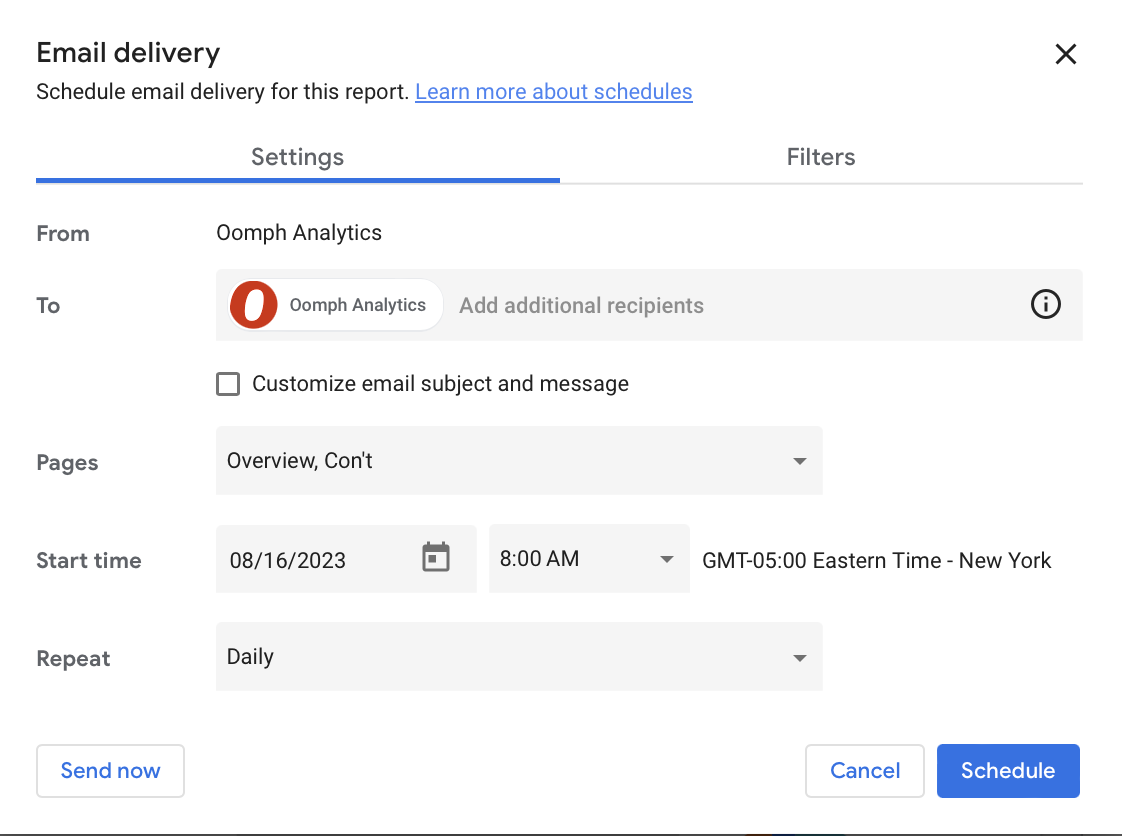

- Share it with others. You can either create a share link so that others can access the dashboard directly or you can set up automatic updates to be sent on a regular basis. This makes it easy for others to stay up-to-date on changes and progress.

A Powerful Path To Data Insights

The digital landscape is growing more fragmented and complex by the day, but tools like Looker Studio make it infinitely easier to find your path forward. Taking the time to configure and customize the platform can deliver major ROI by helping you understand user needs, pinpoint website strengths and challenges, and craft the right digital strategy.

Crunched for time or not sure where to start? Oomph can help take the hassle out of data analysis by setting up and monitoring your Looker Studio dashboards. Get in touch to chat about your needs.

Have you ever tried to buy tickets to a concert and experienced the frustration and eventual rage of waiting for pages to load, unresponsive pages, unclear next steps, timers counting down, or buttons not working to submit — and you probably still walked away with zero tickets? Yeah, you probably had some choice words, and your keyboard and mouse might have suffered your ire in the process.

As a website owner, you strive to create a seamless user experience for your audience. Ideally, one that doesn’t involve them preparing to star in their own version of the printer scene in Office Space. Despite your best efforts, there will be times when users get frustrated due to slow page loads, broken links, navigation loops, or any other technical issues. This frustration can lead to what the industry calls “rage clicks” and “thrashed cursors.” When your users are driven to these actions, your website’s reputation, engagement, and return visits can be damaged. Let’s dig in to discuss what rage clicks and thrashed cursors are and how to deal with frustrated users.

First of all, what are Rage Clicks?

Rage clicks occur when a user repeatedly clicks on a button or link because it fails to respond immediately: the interface offers no feedback that their first click did something. This bad user experience doesn’t motivate them to return for more. These clicks are often accompanied by audible sighs, groans, or even yelling. “Come on, just GO!” might ring a bell if you’ve ever been in this situation. The moments that trigger rage clicks are among the most frustrating things a user can experience when using a website or app.

Rage Clicks are defined technically by establishing that:

- At least three clicks take place

- These three clicks happen within a two-second time frame

- All clicks occur within a 100px radius
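
As a rough illustration of these three criteria, here is a minimal Python sketch. It is not how any particular analytics tool implements detection. The `Click` type and `is_rage_click` function are hypothetical names, and we assume that “within a 100px radius” means within 100 pixels of the first click in the burst.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Click:
    t: float  # timestamp in seconds
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels


def is_rage_click(clicks, min_clicks=3, window_seconds=2.0, radius_px=100.0) -> bool:
    """Return True if any burst of clicks meets all three criteria."""
    ordered = sorted(clicks, key=lambda c: c.t)
    for i, first in enumerate(ordered):
        # Count clicks that follow `first` closely in both time and space.
        nearby = [
            c for c in ordered[i:]
            if c.t - first.t <= window_seconds
            and hypot(c.x - first.x, c.y - first.y) <= radius_px
        ]
        if len(nearby) >= min_clicks:
            return True
    return False
```

Three clicks on roughly the same spot within 1.2 seconds would count as a rage click under this sketch; three clicks spread over more than two seconds, or scattered across the page, would not.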

Similarly, what is a Thrashed Cursor?

A thrashed cursor is when a user moves the cursor back and forth over a page or element, indicating impatience or confusion. Various issues, including slow page load times, broken links, unresponsive buttons, and unclear navigation, can cause users to exhibit these digital behaviors. It can also indicate the user is about to leave the site if they cannot find what they need quickly.

Thrashed cursors are defined technically by establishing that:

- There is an area on the page where a user was moving their mouse erratically

- An established pattern of “thrashing” occurs around or on specific elements or pages

- Higher rate of user exits from the identified pages

Why do Rage Clicks and Thrashed Cursors happen?

Common reasons rage clicks and thrashed cursors happen are:

- Poor Design: Poor design is one of the most common reasons for rage clicks and thrashed cursors. If the website has a confusing layout or navigation structure, it can be frustrating for users to find what they’re looking for. Or, they may assume an element is clickable; when it’s not, it can be irksome. Underlined text is an excellent example, as users often associate underlines with links.

- Technical Issues: Technical issues such as slow loading times, broken links, or non-responsive buttons can cause rage clicks and thrashed cursors. Users expect the website to work correctly; when it doesn’t, they can become annoyed or frustrated. If they click a button, they expect the button to do something.

- Lack of Clarity: If the website’s content is unclear or poorly written, it can cause confusion and frustration for users. They may struggle to understand the information provided or find it challenging to complete the intended action. Content loops can be a good example of this. Content loops happen when users repeatedly go back and forth between pages or sections of a website, trying to find the information they need. Eventually, they become frustrated and leave the website.

How do you resolve issues that lead to Rage Clicks and Thrashed Cursors?

Now that we know what rage clicks and thrashed cursors are and why they happen, how do you resolve them? Here are a few things an agency partner can help you with that can significantly reduce the risk of your users resorting to these behaviors.

Use Performance Measuring Tools

By employing performance measuring, you can analyze the data collected, gain valuable insights into how users interact with your platform, and identify areas for improvement. For example, if you notice a high number of rage clicks on a specific button or link, it may indicate that users are confused about its functionality or that it’s not working correctly. Similarly, if you see a high number of thrashed cursors on a particular page, it may suggest that users are struggling to navigate or find the information they need.

Tools that support Friction or Frustration measurement:

- Clarity (from Microsoft)

- ContentSquare

- Heap

- HotJar

- Mouseflow

- Quantum Metric

Conduct User Experience Exercises and Testing

Identifying the root causes of rage clicks and thrashed cursors can be done through a UX audit. An agency can examine your website design, functionality, and usability, identifying areas of improvement.

- User Journey Mapping: User journey mapping involves mapping the user’s journey through your website from a starting point to a goal, identifying pain points along the way, and determining where users may get stuck or frustrated.

- Usability Testing: Usability testing involves putting the website in front of real users and giving them tasks to complete. The tester then looks to identify issues, such as slow loading times, broken links, or confusing navigation.

- User Surveys: User surveys can be conducted in various ways, including online surveys, in-person interviews, and focus groups. These surveys can be designed to gather information about users’ perceptions of the website, interactions with the website, and satisfaction levels. Questions can be designed to identify areas of frustration, such as difficult-to-find information, slow page load times, or confusing navigation. It’s wise to keep surveys short, so work with your agency to select the questions to garner the best feedback.

- Heat Mapping: Heat mapping involves analyzing user behavior on your website, identifying where users are clicking, scrolling, and spending their time. This can identify areas of the website that are causing frustration and leading to rage clicks and thrashed cursors.

Focus on Website Speed Optimization

A digital agency can synthesize findings from UX research and performance-measuring tools and work to optimize your website for quicker page loads and buttons or links that respond immediately to user actions.

- Image Optimization: Optimizing images on your website will significantly improve page loading times. An agency can help you optimize server settings and compress images to reduce their size without sacrificing quality.

- Minification: Minification involves reducing the size of HTML, CSS, and JavaScript files by removing unnecessary characters such as white space, comments, and line breaks. This can significantly improve page loading times.

- Caching: Caching involves storing frequently accessed website data on a user’s device, reducing the need for data retrieval and improving website speed.

- Content Delivery Network (CDN): A CDN is a network of servers distributed worldwide that store website data, improving website speed by reducing the distance between the user and the server.

- Server Optimization: Server optimization involves optimizing server settings and configurations, such as increasing server resources, using a faster server, and reducing request response time. Website owners frequently skip this step and don’t select the right hosting plan, which can cost more money through lost users and lower conversions.
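
To show what minification means in practice, here is a deliberately naive Python sketch that strips comments, line breaks, and extra white space from a CSS string. The `minify_css` function is our own illustration; production minifiers handle many edge cases (such as quoted strings and selectors where a space is meaningful) that this sketch ignores.

```python
import re


def minify_css(css: str) -> str:
    """Naively shrink a CSS string; illustrative only, not production-safe."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)  # collapse white space and line breaks
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)  # drop spaces around punctuation
    return css.strip()
```

Run against a small rule with a comment and indentation, the output keeps the same declarations in fewer characters, which is the whole point: the browser downloads less and renders sooner.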

Resolve Technical Issues

A web agency can help resolve any technical issues that may be causing frustration for your users. These issues may include broken links or buttons, 404 errors, slow page load times, and server errors. Technical issue resolution can involve various activities, including code optimization, server maintenance, and bug fixes, all aimed at keeping everything working correctly and promptly addressing any issues that arise. Resolving technical issues improves website performance, reducing the likelihood of user frustration and rage clicks.

Next Steps

User frustration can negatively impact user satisfaction and business outcomes. Partnering with a digital agency can be a valuable investment to mitigate these issues. Through the use of tools, UX audits, user surveys, website speed optimization, and technical issue resolution, a digital agency can identify and address the root causes of user frustration, improving the overall user experience. That leads to an increase in user engagement, satisfaction, and loyalty, which means improved conversion rates, higher customer retention, and ultimately, increased revenue for your business.

If your customers are hulking out, maybe it’s time to call us!

More than two years after Google announced the launch of its powerful new website analytics platform, Google Analytics 4 (GA4), the final countdown to make the switch is on.

GA4 will officially replace Google’s previous analytics platform, Universal Analytics (UA), on July 1, 2023. It’s the first major analytics update from Google since 2012 — and it’s a big deal. As we discussed in a blog post last year, GA4 uses big data and machine learning to provide a next-generation approach to measurement, including:

- Unifying data across multiple websites and apps

- A new focus on events vs. sessions

- Cookieless user tracking

- More personalized and predictive analytics

At Oomph, we’ve learned a thing or two about making the transition seamless while handling GA4 migrations for our clients – including a few platform “gotchas” that are definitely better to know in advance. Before you start your migration, do yourself a favor and explore our GA4 setup guide.

Your 12-Step GA4 Migration Checklist

Step 1: Create a GA4 Analytics Property and Implement Tagging

The Gist: Launch the GA4 setup assistant to create a new GA4 property for your site or app. For sites that already have UA installed, Google will begin creating GA4 properties automatically in March 2023 (unless you opt out). If you’re migrating from UA, you can connect your UA property to your GA4 property to use the existing Google tracking tag on your site. For new sites, you’ll need to add the tag directly to your site or via Google Tag Manager.

The Gotcha: During property setup, Google will ask you which data streams you’d like to add (websites, apps, etc.). This is simple if you’re just tracking one site, but gets more complex for organizations with multiple properties, like educational institutions or retailers with individual locations. While UA allowed you to separate data streams by geography or line of business, GA4 handles this differently. This Google guide can help you choose the ideal configuration for your business model.

Step 2: Update Your Data Retention Settings

The Gist: GA4 lets you control how long you retain data on users and events before it’s automatically deleted from Google’s servers. For user-level data, including conversions, you can hang on to data for up to 14 months. For other event data, you have the option to retain the information for 2 months or 14 months.

The Gotcha: The data retention limits are much shorter than UA, which allowed you to keep Google-signals data for up to 26 months in some cases. The default retention setting in GA4 is 2 months for some types of data – a surprisingly short window, in our opinion – so be sure to extend it to avoid data loss.

Step 3: Initialize BigQuery

The Gist: Have a lot of data to analyze? GA4 integrates with BigQuery, Google’s cloud-based data warehouse, so you can store historical data and run analyses on massive datasets. Google walks you through the steps here.

The Gotcha: Since GA4 has tight time limits on data retention as well as data limits on reporting, skipping this step could compromise your reporting. BigQuery is a helpful workaround for storing, analyzing and visualizing large amounts of complex data.

Step 4: Configure Enhanced Measurements

The Gist: GA4 measures much more than pageviews – you can now track actions like outbound link clicks, scrolls, and engagements with YouTube videos automatically through the platform. When you set up GA4, simply check the box for any metrics you want GA4 to monitor. You can still use Google tags to customize tracking for other types of events or use Google’s Measurement Protocol for advanced tracking.

The Gotcha: If you were previously measuring events through Google tags that GA4 will now measure automatically, take the time to review which ones to keep to avoid duplicating efforts. It may be simpler to use GA4 tracking – giving you a good reason to do that Google Tag Manager cleanup you’ve been meaning to get to.

Step 5: Configure Internal and Developer Traffic Settings

The Gist: To avoid having employees or IT teams cloud your insights, set up filters for internal and developer traffic. You can create up to 10 filters per property.

The Gotcha: Setting up filters for these users is only the first step – you’ll also need to toggle the filter to “Active” for it to take effect (a step that didn’t exist in UA). Make sure to turn yours on for accurate reporting.

Step 6: Migrate Users

The Gist: If you were previously using UA, you’ll need to migrate your users and their permission settings to GA4. Google has a step-by-step guide for migrating users.

The Gotcha: Migrating users is a little more complex than just clicking a button. You’ll need to install the GA4 Migrator for Google Analytics add-on, then decide how to migrate each user from UA. You also have the option to add users manually.

Step 7: Migrate Custom Events

The Gist: Event tracking has fundamentally changed in GA4. While UA offered three default parameters for events (event category, action, and event label), GA4 lets you create any custom conventions you’d like. With more options at your fingertips, it’s a great opportunity to think through your overall measurement approach and which data is truly useful for your business intelligence.

When mapping UA events to GA4, look first to see if GA4 is collecting the data as an enhanced measurement, automatically collected, or recommended event. If not, you can create your own custom event using custom definitions. Google has the details for mapping events.

The Gotcha: Don’t go overboard creating custom definitions – GA4 limits you to 50 per property.
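
As one possible way to think about the mapping, here is a minimal Python sketch that converts a UA-style event (category, action, label) into a GA4-style event name plus parameters. The `ua_event_to_ga4` function, the snake_case naming convention, and the `legacy_*` parameter names are all our own assumptions for illustration. GA4 does not require them, and Google’s mapping guidance should take precedence.

```python
import re


def ua_event_to_ga4(category: str, action: str, label: str = "") -> dict:
    """Map a UA event triple to a GA4-style event (hypothetical convention)."""
    # Build a snake_case event name from the UA category and action.
    name = re.sub(r"[^a-z0-9]+", "_", f"{category}_{action}".lower()).strip("_")
    # Keep the original UA values as parameters so no context is lost.
    params = {"legacy_category": category, "legacy_action": action}
    if label:
        params["legacy_label"] = label
    return {"name": name, "params": params}
```

Each parameter you want to report on would need to be registered as a custom definition, which is why the per-property limit above is worth planning around before you migrate every UA event one-to-one.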

Step 8: Migrate Custom Filters to Insights

The Gist: Custom filters in UA have become Insights in GA4. The platform offers two types of insights: automated insights based on unusual changes or emerging trends, and custom insights based on conditions that matter to you. As you implement GA4, you can set up custom insights for Google to display on your Insights dashboard. Google will also email alerts upon request.

The Gotcha: Similar to custom events, GA4 limits you to 50 custom insights per property.

Step 9: Migrate Your Segments

The Gist: Segments work differently in GA4 than they do in UA. In GA4, you’ll only find segments in Explorations. The good news is you can now set up segments for events, allowing you to segment data based on user behavior as well as more traditional segments like user geography or demographics.

The Gotcha: Each Exploration has a limit of 10 segments. If you’re using a lot of segments currently in UA, you’ll likely need to create individual reports to see data for each segment. While you can also create comparisons in reports for data subsets, those are even more limited at just four comparisons per report.

Step 10: Migrate Your Audiences

The Gist: Just like UA, GA4 allows you to set up audiences to explore trends among specific user groups. To migrate your audiences from one platform to another, you’ll need to manually create each audience in GA4.

The Gotcha: You can create a maximum of 100 audiences for each GA4 property (starting to sense a theme here?). Also, keep in mind that GA4 audiences don’t apply retroactively. While Google will provide information on users in the last 30 days who meet your audience criteria — for example, visitors from California who donated more than $100 — it won’t apply the audience filter to users earlier than that.

Step 11: Migrate Goals to Conversion Events

The Gist: If you were previously tracking goals in UA, you’ll need to migrate them over to GA4, where they’re now called conversion events. GA4 has a goals migration tool that makes this process pretty simple.

The Gotcha: GA4 limits you to 30 custom conversion events per property. If you’re in e-commerce or another industry with complex marketing needs, those 30 conversion events will add up very quickly. With GA4, it will be important to review conversion events regularly and retire ones that aren’t relevant anymore, like conversions for previous campaigns.
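
If you are auditing a large UA setup, it can help to compare what you plan to migrate against the limits mentioned in this checklist. The Python sketch below does that with a simple lookup. The numbers are the ones quoted in this guide and may change, and the dictionary keys and `over_limit` function are hypothetical names of our own.

```python
# Limits as quoted in this checklist; verify against Google's current documentation.
GA4_LIMITS = {
    "data_filters": 10,
    "custom_definitions": 50,
    "custom_insights": 50,
    "segments_per_exploration": 10,
    "audiences": 100,
    "conversion_events": 30,
}


def over_limit(planned: dict[str, int]) -> dict[str, int]:
    """Return how far each planned count exceeds its limit, if at all."""
    return {
        key: count - GA4_LIMITS[key]
        for key, count in planned.items()
        if key in GA4_LIMITS and count > GA4_LIMITS[key]
    }
```

For example, a plan with 120 audiences and 12 conversion events would flag audiences as 20 over the limit and leave conversion events alone.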

Step 12: Migrate Alerts

The Gist: Using custom alerts in UA? As we covered in Step 8, you can now set up custom insights to keep tabs on key changes in user activity. GA4 will deliver alerts through your Insights dashboard or email, based on your preferences.

The Gotcha: This one is actually more of a bonus – GA4 will now evaluate your data hourly, so you can learn about and respond to changes more quickly.

The Future of Measurement Is Here

GA4 is already transforming how brands think about measurement and user insights – and it’s only the beginning. While Google has been tight-lipped about the GA4 roadmap, we can likely expect even more enhancements and capabilities in the not-too-distant future. The sooner you make the transition to GA4, the sooner you’ll have access to a new level of intelligence to shape your digital roadmap and business decisions.

Need a hand getting started? We’re here to help – reach out to book a chat with us.

Was this blog written by ChatGPT? How would you really know? And what impact would it have on Oomph’s site if it were?

Yes, we know there are some great AI-detecting tools out there. But for the typical reader, picking an AI article out of a crowd can be challenging. And with AI tools like ChatGPT delivering better-quality results than ever, many companies are struggling to decide whether to hand their content and SEO reins over to the machines.

While AI can add value to your content, companies should proceed with caution to avoid some potentially big pitfalls. Here’s why.

Quality Content Is Critical to SEO

All the way back in 1996, Bill Gates said “Content is King.” This phrase became ubiquitous in the early years of SEO. At that time, you could rank well simply by writing about a search topic, then optimizing your writing with the right keywords.

Since then, search algorithms have evolved, and the Google search engine results page (SERP) is more crowded than ever (not to mention the new continuous scroll). While ranking isn’t as easy as it used to be, content — whether it’s a video, an image, a product, a blog, or a news story — still matters. When content ranks well, it’s an ad-spend-free magnet for readers that eventually become customers and subscribers. What else on your website can do that?

That makes your content special. It also puts a premium on producing a high volume of relevant content quickly. For years, brands have done this the old-fashioned way: with copywriters and designers researching, writing, revising, creating images, and publishing ad infinitum.

Until AI.

AI-Powered Content Generation Changes How We Make Content

There’s no point in denying it: AI will impact SEO. But it’s still up for debate just how deep that impact will be.

The rise of AI-powered language processing tools like ChatGPT and Meta’s Llama makes quick content generation a reality. They can easily produce high-quality content that will likely only get better with time. ChatGPT can produce an article in minutes, not hours, and even suggest keywords for you.

For all those reasons, marketers have embraced these new tools. ChatGPT shattered records when it reached an estimated 100 million monthly active users in a mere two months. As the saying goes, though, just because we can doesn’t mean we should, especially if using it means compromising on quality or losing what makes us, well, us.

After all, AI is a tool, not a human replacement. If you’re considering integrating AI into your martech stack, here’s what to keep in mind.

4 Things to Know Before Using AI for Content

1. Google may ding you.

In August 2022, Google released an algorithm update called the “helpful content update.” You can read more on Google’s blog, but what’s important is this: Google prioritizes content by people, for people.

In other words, using AI solely to churn out articles that feed the search engine beast won’t work. You should continue to create content that puts your audience first, is genuinely helpful, and is 100% unique to your brand.

But Google’s stance does raise the question: Will they really know if you used AI to generate your content? Well, they do have a very special set of skills…

While AI content can be good quality and easy to read, tools like Crossplag’s AI Content Detector and OpenAI’s GPT-2 Output Detector can detect whether something is written by a human or by an AI bot. That comes down, in part, to the fact that AI lacks E-E-A-T – experience, expertise, authoritativeness, and trustworthiness – which is not just a major factor in SEO, but a major reason why people consume content in the first place.

2. AI isn’t always accurate.

AI culls information from across the web, and it’s remarkably good at it. But aggregating massive amounts of information isn’t the same as fact-checking. AI tools often get it wrong – even with easily verifiable, fact-based questions.

Take the question: What is the largest country in Central America that isn’t Mexico?

AI’s answer:

The largest country in Central America that isn’t Mexico is Guatemala. Guatemala has an area of about 108,890 square kilometers, making it the largest country in Central America by land area. It is bordered by Mexico to the north, Belize to the northeast, Honduras to the east, El Salvador to the southeast, and the Pacific Ocean to the south.

Google’s answer:

Nicaragua is the largest country in the subregion, with a total area of over 130 thousand square kilometers, followed by Honduras, with more than 112 thousand square kilometers.

(Hint: Google is right)

This is a problem for your business and for your SEO. Accuracy is an important part of EEAT. It’s especially critical for “your money or your life” (YMYL) content, like financial or medical advice. In these cases, the content you publish can and does impact real people’s lives and livelihoods.

Spotty accuracy has even prompted some sites, like StackOverflow, to ban AI-generated content.

3. You don’t have the rights to your AI-generated content.

AI-generated content isn’t actually copyrightable. Yes, you read that right.

As it stands, the courts have interpreted the Copyright Act to mean that only human-authored works can be copyrighted. Something is only legally defensible when it involves at least a minimal amount of creativity.

We’re all familiar with this concept when it comes to books, TV shows, movies, and paintings, but it matters for your website, too. You want your content and your ideas to be yours. If you use AI-generated content, be aware that it isn’t subject to standard intellectual property rules and may not be protected.

4. AI-generated content can’t capture your voice.

Even if you fly under Google’s radar with your AI content, it still won’t really feel like you. You are the only you. We know that sounds like it belongs on an inspirational poster, but it’s true. Your voice is what readers will connect with, believe in, and ultimately trust.

Sure, AI may succeed at stringing together facts and keywords to create content that ranks. And that content may even drive people to your site. But it lacks the emotional intelligence to infuse your content with real-life examples and anecdotes that make readers more likely to read, share, and engage with your content and your brand.

Your voice is also what sets you apart from other brands in your industry. Without that, why would a customer choose you?

AI and SEO Is a Journey, Not a Destination

AI is not the end of human-driven SEO. In reality, AI has only just arrived. But the real opportunity lies in finding out how AI can enhance, not replace, our work to create winning SEO content.

Think about content translation. Hand translation is the most premium translation option out there. It’s also costly. While machine translation on its own can be a bit of a mess, many translation companies actually start with an automated solution, then bring in the humans to polish that first translation into a final product. If you ask us, AI and SEO will work in much the same way.

Even in a post-AI world, SEO all comes down to this guidance from Google:

“If it is useful, helpful, original, and satisfies aspects of E-E-A-T, it might do well in Search. If it doesn’t, it might not.”

If and when you do decide to leverage AI, keep these tips in mind:

- Use AI to generate ideas, not create finished products. Asking ChatGPT to provide five industry trends that you turn into a series of articles is one thing; cutting and pasting a full AI-generated article onto your website is another.

- Fact-check anything and everything AI tells you.

- Infuse your brand into every piece of AI-generated copy. Personal stories, insights, and anecdotes are what make great content great.

At Oomph, we believe quality branded content is just one component of a digital experience that engages and inspires your audience.

Need help integrating SEO content into your company’s website? Let’s talk.

There’s a phrase often used to gauge healthcare quality: the right care, at the right time, in the right place. When those elements are out of sync, the patient experience can take a turn for the worse. Think about missed appointments, misunderstood pre-op instructions, mismanagement of medication… all issues that require clear and timely communication to ensure positive outcomes.

Many healthcare organizations are tapping into patient engagement tools that use artificial intelligence (AI) to drive better healthcare experiences. In this article, we’ll cover a number of use cases for AI within healthcare, showing how it can benefit providers, their patients, and their staff in an increasingly digital world.

Healthcare Consumers Are Going Digital

Use of AI in the clinical space has been growing for years, from Google’s AI aiding diagnostic screenings to IBM’s Watson AI informing clinical decision making. But there are many other touchpoints along a patient’s continuum of care that can impact patient outcomes.

The industry is seeing a shift towards more personalized and data-driven patient engagement, with recent studies showing that patients are ready to integrate AI and other digital tools into their healthcare experiences.

For instance, healthcare consumers are increasingly comfortable with doctors using AI to make better decisions about their care. They also want personalized engagement to motivate them on their health journey, with 65% of patients agreeing that communication from providers makes them want to do more to improve their health.

At the same time, 80% of consumers prefer to use digital channels (online messaging, virtual appointments, text, etc.) to communicate with healthcare providers at least some of the time. This points to significant opportunities for digital tools to help providers and patients manage the healthcare experience.

Filling in Gaps: AI Use Cases for Healthcare

Healthcare will always need skilled, highly trained experts to deliver high-quality care. But AI can fill in some gaps by addressing staffing shortages, easing workflows, and improving communication. Many healthcare executives also believe AI can provide a full return on investment in less than three years.

Here are some ways AI can support healthcare consumers and providers to improve patients’ outcomes and experiences.

Streamline basic communications

Using AI as the first line to a patient for basic information enables convenient, personalized service without tying up staff resources. With tools like text-based messaging, chatbots, and automated tasks, providers can communicate with people on the devices, and at the times, that they prefer.

Examples include:

- Scheduling appointments

- Sending appointment reminders

- Answering insurance questions

- Following up on a specialty referral

Remove barriers to access

AI algorithms are being used in some settings to conduct initial interviews that help patients determine whether they need to see a live medical professional, and then send them to the right provider.

AI can offer a bridge for patients who, for a host of reasons, are stuck on taking the first step. For instance, having the first touchpoint as a chatbot helps overcome a barrier for patients seeking care within often-stigmatized specialties, such as behavioral health. It can also minimize time wasted at the point of care communicating things like address changes and insurance providers.

Reduce no-show rates

In the U.S., patient no-show rates range from 5.5% to 50%, depending on the location and type of practice. Missed appointments not only result in lost revenue and operational inefficiencies for health systems; they can also delay preventive care, increase readmissions, and harm long-term outcomes for patients.

AI-driven communications help ensure that patients receive critical reminders at optimal times, mitigating these risks. For instance:

- Text-based procedure prep. Automated, time-specific reminders sent to patients prior to a procedure can ensure they correctly follow instructions — and remember to show up

- Post-procedure support. Chatbots can deliver post-op care instructions or follow-up visit reminders, with a phone number to call if things don’t improve
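To make "time-specific reminders" a little more concrete, here is a minimal Python sketch of how send times might be worked out from an appointment time. The function name `reminder_times` and the offsets (72, 24, and 2 hours beforehand) are assumptions made for illustration, not a clinical recommendation or any vendor's API.

```python
from datetime import datetime, timedelta

# Hypothetical offsets before the appointment; a real system would tune
# these per procedure and per patient preference.
DEFAULT_OFFSETS = (timedelta(hours=72), timedelta(hours=24), timedelta(hours=2))


def reminder_times(appointment, now, offsets=DEFAULT_OFFSETS):
    """Return reminder send times that are still in the future, earliest first."""
    candidates = [appointment - offset for offset in offsets]
    # Skip any reminder whose send time has already passed.
    return sorted(t for t in candidates if t > now)
```

For an appointment booked less than three days out, the 72-hour reminder is simply skipped and only the remaining two are scheduled.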

Close information gaps

Imagine a patient at home, alone, not feeling well, and confused about how to take their medication or how to handle post-operative care. Not having that critical information can lead to poor outcomes, including readmission.

Delivering information at the right time, in the right place, is key. But multiple issues can arise, such as:

- A patient needs help outside normal business hours

- Different care team members provide conflicting instructions

- An understaffed care team is unable to return a patient’s call in a timely manner

By providing consistent, accurate, and timely information, AI-enabled tools can provide critical support for patients and care teams.

Minimize staff burnout

Burnout and low morale have contributed to severe staffing shortages in the U.S. healthcare system. The result is an increase in negative patient outcomes, in addition to massive hikes in labor costs for hospitals and health systems.

AI can help lighten the burden on healthcare employees through automated touchpoints in the patient journey, such as self-scheduling platforms or FAQ-answering chatbots. AI can even perform triage informed by machine learning, helping streamline the intake process and getting patients the right care as quickly as possible.

This frees up staff to focus on more meaningful downstream conversations between patients and care teams. It can also reduce phone center wait times for those patients (often seniors) who still rely on phone calls with live staff members.

Maximize staff resources

When 80% of healthcare consumers are willing to switch providers for convenience factors alone, it’s crucial to communicate with patients through their preferred channels. Some people respond to asynchronous requests (such as scheduling confirmations) late at night, while others must speak to a live staff member during the day.

Using multimodal communication channels (phone, text, email, web) offers two major benefits for healthcare providers. For one, you can better engage patients who prefer asynchronous communication. You can also identify the ratio of patients who prefer live calls and staff accordingly when it’s needed most.
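As a rough sketch of what "identifying the ratio" could look like in practice, the Python snippet below tallies a log of patient contacts by channel. The function name `channel_share` and the shape of the input (a plain list of channel names) are assumptions for illustration; real contact data would come from your own systems.

```python
from collections import Counter


def channel_share(contacts):
    """Return each channel's share of all contacts as a fraction between 0 and 1."""
    counts = Counter(contacts)
    total = sum(counts.values())
    if total == 0:
        return {}  # no contacts logged yet
    return {channel: count / total for channel, count in counts.items()}
```

A phone share that stays high during certain hours is one signal of when live staff are needed most.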

Leverage customer feedback

AI provides fast, seamless avenues to gather and track patient satisfaction data and create a reliable, continual customer feedback loop. Tools like chatbots and text messaging expand the number of ways patients can communicate with healthcare providers, making it easier to leave feedback. That drives a better digital customer experience and can lead to better satisfaction scores, which may in turn affect payment or quality scores.

AI offers another benefit, too: the ability to identify and respond more quickly to negative feedback. The more swiftly a problem is resolved, the better the consumer experience.

A Few Tips for Getting Started

First, find a trusted technology partner who has experience with healthcare IT stacks and understands how AI fits into the landscape. The healthcare industry is distinctly different from other verticals that might use tools like chatbots and automated tasks. You need a partner who’s familiar with the nuances of the healthcare consumer experience and regulatory compliance requirements.

Next, start small. It’s best to choose your first AI applications in a strategic, coordinated manner. One approach is to identify the biggest bottlenecks for care teams and/or patients, then assess which areas present the lowest risk to the customer experience and the greatest chance of operational success.

Finally, track the progress of your first implementation. Evaluate, iterate, evaluate again, and then expand into other areas when you’re comfortable with the results.

Focal points for iteration:

- Standardize and optimize scripts

- Simplify processes for both patients and staff

- Learn the pathways that users take to accomplish tasks

- Monitor feedback and make improvements as needed

Above all, remember that successful use of AI isn’t just about how well you implement the technology. It’s about the impact those digital tools have on improving patient outcomes and increasing patient satisfaction with their healthcare experience.

Interested in exploring the specific ways AI can benefit your care team and patients? We’re here to help! Contact us today.

The circular economy aims to help the environment by reducing waste, mainly by keeping goods and services in circulation for as long as possible. Unlike the traditional linear economy, in which things are produced, consumed, and then discarded, a circular economy ensures that resources are shared, repaired, reused, and recycled, over and over.

What does this have to do with your digital platform? In a nutshell: everything.

From tackling climate change to creating more resilient markets, the circular economy is a systems-level solution for global environmental and economic issues. By building digital platforms for the circular economy, your business will be better prepared for whatever the future brings.

The Circular Economy Isn’t Coming. It’s Here.

With environmental challenges growing day by day, businesses all over the world are going circular. Here are a few examples:

- Target plans for 100% of its branded products to last longer, be easier to repair or recycle, and be made from materials that are regenerative, recyclable, or sustainably sourced.

- Trove’s ecommerce platform lets companies buy back and resell their own products. This extends each product’s use cycle, lowering the environmental and social cost per item.

- Renault is increasing the life of its vehicle parts by restoring old engine parts. This limits waste, prolongs the life of older cars, and reduces emissions from manufacturing.

One area where nearly every business could adopt a circular model is the creation and use of digital platforms. The process of building websites and apps, along with their use over time, consumes precious resources (both people and energy). That’s why Oomph joined 1% For the Planet earlier this year. Our membership reflects our commitment to do more collective good — and to hold ourselves accountable for our collective impact on the environment.

But we’re not just donating profits to environmental causes. We’re helping companies build sustainable digital platforms for the circular economy.

Curious about your platform’s environmental impact? Enter your URL into this tool to get an estimate of your digital platform’s carbon footprint.

Changing Your Platform From Linear to Circular

If protecting the environment and promoting sustainability is a priority for your business, it’s time to change the way you build and operate your websites and apps. Here’s what switching to a platform for the circular economy could look like.

From a linear mindset…

When building new sites or apps, many companies fail to focus on longevity or performance. Within just a few years, their platforms become obsolete, either as a result of business changes or a desire to keep up with rapidly evolving technologies.

So, every few years, they have to start all over again — with all the associated resource costs of building a new platform and migrating content from the old one.

Platforms that aren’t built with performance in mind tend to waste a ton of energy (and money) in their daily operation. As these platforms grow in complexity and slow down in performance, one unfortunate solution is to just increase computing power. That means you need new hardware to power the computing cycles, which leads to more e-waste, more mining for metals, more pollution from manufacturing, and more electricity to power the entire supply chain.

Enter the circular economy.

…to a circular approach.

Building a platform for the circular economy is about reducing harmful impacts and wasteful resource use, and increasing the longevity of systems and components. There are three main areas you can address:

1. Design out waste and pollution from the start.

At Oomph, we begin every project with a thorough and thoughtful discovery process that gets to the heart of what we’re building, and why. By identifying what your business truly needs in a platform — today and potentially tomorrow — you’ll minimize the need to rebuild again later.

It’s also crucial to build efficiencies into your backend code. Clean, efficient code makes things load faster and run more quickly, with fewer energy cycles required per output.
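One common way to spend fewer cycles per output is to avoid repeating work whose result hasn't changed. The Python sketch below uses the standard library's `functools.lru_cache` to illustrate the idea. The `render_fragment` function is a hypothetical stand-in for an expensive step such as a database query or template render, and caching is only one of many possible efficiency techniques, not a description of any particular platform.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def render_fragment(page_id):
    """Hypothetical stand-in for an expensive step (database query, template render)."""
    return f"<section>content for page {page_id}</section>"


def cache_stats():
    """Report how many calls were computed versus served from the cache."""
    info = render_fragment.cache_info()
    return {"computed": info.misses, "served_from_cache": info.hits}
```

If the same page is requested repeatedly, only the first request does the expensive work and the rest are answered from memory.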